Hello, this is Chie Furusawa from Dwango Media Village. In this article, I introduce a demo and a method for posing a 3D character model by drawing a stick figure. If you’d like, please try the demo below first. The method combines sketch-based search with machine-learning-based 3D pose estimation from still images. If you are interested in the details of the method, please read on.

This demo is no longer available.

Of the three candidate poses displayed, the leftmost pose will be applied. (The second and third candidates are displayed only and cannot be selected.)

When you draw a stick figure on the canvas on the right, the character takes the pose closest to your sketch. The three stick figures displayed at the top left are the closest matching poses (stick figure images), ordered from the left by similarity. There are also buttons to change the background, so you can create images with poses that blend into different scenes.

| UI | Description |
|---|---|
| (image) | Resets the sketch. |
| (image) | Adds a second character. Press again to revert to one character. The pose update applies to the last added character. |
| (image) | Toggles the visibility of the drawn sketch. |
| (image) | Adds a background image. Click to change the background. |
| (image) | The candidate poses estimated from the sketch. The leftmost pose is the closest estimated pose, which the character takes. The poses are arranged in decreasing order of similarity. |

You can experience how the character follows the position and size of the sketch and how the pose changes with each stroke.

You can create images like these that match the background.

The images and character models used are from the following sites. Thank you very much.

Being able to manipulate the poses of 3D character models makes it possible to build a variety of applications in which the character is the main point of interaction. The pose of a 3D character model is manipulated by specifying information about the model’s joints. Motion capture and Kinect are representative technologies for obtaining 3D joint information. However, we wondered whether character poses could be specified with simpler inputs, without special equipment such as motion capture.

One example of a “simpler input” is a full-body image taken with a mobile or web camera [3dposemobile]. Last year, Dwango Media Village conducted research on 3D pose estimation that does not use 3D datasets for training [3dpose]. With this research, 3D joint coordinates can be obtained from still images. In the demo above, the same research is used to estimate 3D joint coordinates from hand-drawn stick figures so that characters can take the drawn poses. As these examples show, obtaining 3D joint coordinates from inputs that need no special equipment, such as still images from smartphones or web cameras, or hand-drawn stick figures, is important for letting more people experience specifying 3D character poses.

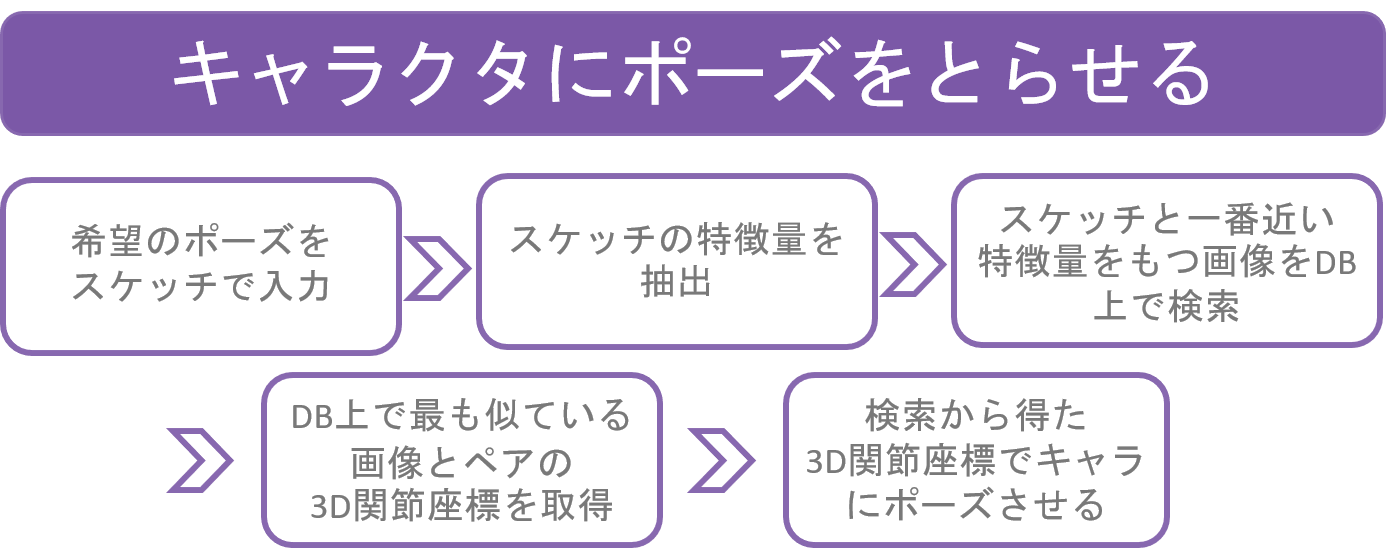

In this demo, 3D joint coordinates are estimated from images of hand-drawn stick figures. The process for specifying a 3D character pose from a drawn stick figure works as follows: to find the desired pose, we compare the image features of the hand-drawn stick figure with those of the stick figures in a database, each of which is linked to a 3D pose, and select the most similar one.

The image features are FMEOH (fine multi-scale edge orientation histogram) features from [MangaRetrieval]. We compute them for both the “artificial stick figure” images stored in the database and the user’s “hand-drawn stick figure” image.
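To give a feel for what an edge-orientation feature looks like, here is a minimal sketch in plain Python. It is a simplified stand-in and not the FMEOH feature of [MangaRetrieval]: the function name `edge_orientation_histogram`, the grid of `cells`, and the number of `bins` are all assumptions made for illustration.

```python
import math

def edge_orientation_histogram(image, cells=4, bins=8):
    """Simplified stand-in for FMEOH (hypothetical, for illustration only).

    image: 2D list of floats (0.0 = background, 1.0 = stroke), at least 3x3.
    Returns a flat, L2-normalized vector of length cells * cells * bins.
    """
    h, w = len(image), len(image[0])
    feat = [0.0] * (cells * cells * bins)
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Central-difference image gradient.
            gx = image[y][x + 1] - image[y][x - 1]
            gy = image[y + 1][x] - image[y - 1][x]
            mag = math.hypot(gx, gy)
            if mag == 0.0:
                continue
            # Fold the gradient angle into [0, pi) so that both sides of a
            # stroke vote for the same orientation bin.
            theta = math.atan2(gy, gx) % math.pi
            b = min(int(theta / math.pi * bins), bins - 1)
            # Spatial cell that this pixel falls into.
            cy = min(y * cells // h, cells - 1)
            cx = min(x * cells // w, cells - 1)
            feat[(cy * cells + cx) * bins + b] += mag
    norm = math.sqrt(sum(v * v for v in feat))
    return [v / norm for v in feat] if norm > 0 else feat
```

Because the vector is normalized, two sketches can be compared with a dot product or a Euclidean distance; a vertical stroke and a horizontal stroke, for example, fall into different orientation bins.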

Both the “artificial stick figure” image and its paired 3D joint coordinates are derived from the same full-body image using the 3D pose estimation method of [3dpose]. First, a trained model estimates the 3D pose from the full-body image. The “artificial stick figure” image is then generated by drawing the joint positions given by the estimated 3D joint coordinates. Each such image, paired with its estimated 3D joint coordinates, forms one data point in the database used in this method. Although “artificial stick figure” images could be drawn from arbitrary angles using the estimated 3D joint coordinates, in this demo we created data only from the viewing angle of the input still image. The poses used in the demo are selected from about 260 data points in the database.
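As a rough illustration of how an “artificial stick figure” could be drawn from 3D joint coordinates, the sketch below projects the joints orthographically (by dropping z) and rasterizes the bones as line segments. The function name `render_stick_figure`, the joint layout, the bone list, and the canvas size are hypothetical; the article does not specify the projection or drawing code actually used.

```python
def render_stick_figure(joints3d, bones, size=64, margin=4):
    """Draw a stick figure from 3D joints (hypothetical sketch).

    joints3d: list of (x, y, z) tuples; bones: list of (i, j) index pairs.
    Returns a size x size 2D list with 1.0 on strokes and 0.0 elsewhere.
    """
    xs = [p[0] for p in joints3d]
    ys = [p[1] for p in joints3d]
    # Uniform scale so the larger extent fits inside the margins.
    span = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    scale = (size - 1 - 2 * margin) / span

    def to_pixel(p):
        # Orthographic projection: ignore z, keep x and y.
        px = int(round((p[0] - min(xs)) * scale)) + margin
        # Image rows grow downward, so flip the y axis.
        py = size - 1 - margin - int(round((p[1] - min(ys)) * scale))
        return px, py

    canvas = [[0.0] * size for _ in range(size)]
    for i, j in bones:
        (x0, y0), (x1, y1) = to_pixel(joints3d[i]), to_pixel(joints3d[j])
        # Sample enough points along the segment to leave no gaps.
        steps = max(abs(x1 - x0), abs(y1 - y0), 1)
        for s in range(steps + 1):
            t = s / steps
            x = int(round(x0 + (x1 - x0) * t))
            y = int(round(y0 + (y1 - y0) * t))
            canvas[y][x] = 1.0
    return canvas

# Toy skeleton: head, pelvis, left foot, right foot.
joints = [(0.0, 2.0, 0.0), (0.0, 1.0, 0.3), (-0.5, 0.0, 0.0), (0.5, 0.0, 0.0)]
bones = [(0, 1), (1, 2), (1, 3)]
figure = render_stick_figure(joints, bones)
```

Drawing from another viewing angle would amount to rotating the joints before the projection step; as noted above, the demo only uses the angle of the input still image.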

By combining research on image similarity measurement and 3D pose estimation from still images, we realized the flow of “sketching a stick figure → estimating the 3D pose closest to the stick figure → applying the estimated 3D pose to the character.” Compared with estimating 3D poses directly from stick figures, using search avoids producing broken poses and reduces the time needed for 3D pose estimation.
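The search step can be sketched as a brute-force nearest-neighbor lookup. This is a minimal sketch under assumptions: the function name `top_k_poses`, the Euclidean distance, and the toy two-dimensional features are illustrative and are not taken from the demo’s implementation.

```python
import math

def top_k_poses(query_feature, database, k=3):
    """Return the k poses whose features are closest to the query, nearest first.

    database: list of (feature_vector, pose) pairs; pose can be any object,
    for example a list of 3D joint coordinates.
    """
    def distance(a, b):
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

    ranked = sorted(database, key=lambda entry: distance(query_feature, entry[0]))
    return [pose for _, pose in ranked[:k]]

# Toy database with hypothetical 2D features and pose labels.
database = [
    ([1.0, 0.0], "arms_up"),
    ([0.0, 1.0], "sitting"),
    ([0.7, 0.7], "walking"),
    ([-1.0, 0.0], "lying"),
]
candidates = top_k_poses([0.9, 0.1], database)
# candidates[0] is the pose the character would take.
```

With a database of a few hundred entries, a linear scan like this is cheap, and every returned pose is one that already exists in the database.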

In the introduction to the previous section, I mentioned that specifying a pose requires obtaining 3D joint information. Here, I will briefly touch on how to control the joints of a 3D character using that information. Determining a posture by specifying the 3D coordinates of joints is called inverse kinematics (IK). Conversely, determining a posture by specifying joint angles is called forward kinematics (FK). Since we want to specify poses using the estimated 3D joint coordinates, we need a package or library that uses IK to move the character’s joints to the specified positions. One such package is Final IK for Unity, which we use in this demo to specify the character’s poses.
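The difference between FK and IK can be illustrated with a planar chain of two bones, such as an upper arm and a forearm. The sketch below is a textbook analytic solution written in Python for illustration; it is not Final IK’s API, and the function names and bone lengths are assumptions.

```python
import math

def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """FK: joint angles (radians) -> 2D position of the end of the chain."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y

def inverse_kinematics(x, y, l1=1.0, l2=1.0):
    """IK: target position -> joint angles, via the law of cosines.

    For targets out of reach, the cosine is clamped, which yields a fully
    stretched or fully folded chain.
    """
    d2 = x * x + y * y
    cos_t2 = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    cos_t2 = max(-1.0, min(1.0, cos_t2))
    theta2 = math.acos(cos_t2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2),
                                           l1 + l2 * math.cos(theta2))
    return theta1, theta2

# Round trip: solve for the angles that reach a target, then verify with FK.
target = (1.2, 0.5)
angles = inverse_kinematics(*target)
reached = forward_kinematics(*angles)
```

A full character has many more joints and constraints than this two-bone chain, which is why a dedicated IK package is used in practice.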

Finally, I’d like to touch on why we considered this kind of application. Recently, various apps that apply 3D joint coordinates estimated from still images have been emerging [Homecourt], [Michikon]. However, full-body images seemed unsuitable as input for entertainment applications aimed at “content creation by individuals,” because taking a full-body selfie with a smartphone is difficult. To get around this problem, some methods use only upper-body or face images as input. I still wanted to specify full-body poses for characters, which led me to develop a method that does not require full-body selfies. In the future, we might see various unexpected apps and content built on 3D joint coordinates estimated from still images. I look forward to this and hope to contribute to the development of this field.

[3dpose] Yasunori Kudo, Keisuke Ogaki, Yusuke Matsui, and Yuri Odagiri. "Unsupervised Adversarial Learning of 3D Human Pose from 2D Joint Locations" arXiv https://arxiv.org/abs/1803.08244

[MangaRetrieval] Yusuke Matsui, Kota Ito, Yuji Aramaki, Azuma Fujimoto, Toru Ogawa, Toshihiko Yamasaki, and Kiyoharu Aizawa. "Sketch-based Manga Retrieval using Manga109 Dataset" Multimedia Tools and Applications (MTAP), Springer, 2017. http://yusukematsui.me/project/sketch2manga/sketch2manga.html

[Homecourt] HomeCourt - The Basketball App https://itunes.apple.com/jp/app/homecourt-the-basketball-app/id1258520424?mt=8

[Michikon] ミチコン-VisionPose Single3D- https://itunes.apple.com/jp/app/%E3%83%9F%E3%83%81%E3%82%B3%E3%83%B3-visionpose-single3d/id1461437373?mt=8

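[3dposemobile] Androidだけでもアリシアちゃんになれちゃうアプリを作った話@第45回コンピュータビジョン勉強会 (How I built an app that lets you become Alicia-chan using only Android, presented at the 45th Computer Vision Study Group)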