ASCII art, in the broadest sense, is any artwork created using text. It originally referred to art created using only the 95 printable ASCII characters. Today, with the wide selection of characters from various languages available to modern computers, the possibilities of ASCII art (or "AA", as Japanese net users call it) are almost endless.

Because ASCII art is created using the same characters as normal writing, detecting it automatically is non-trivial. Further complicating matters, ASCII art and related textual art are found primarily on the Internet, which is full of unconventional uses of language. Because language on the Internet is so unpredictable, and because the potential character set is so large, a rule-based system for detecting ASCII art would seem impractical.

This article introduces "AA-chan", a neural network for finding and identifying ASCII art and three other text categories. It was trained on a multilabel classification task using comments from the Japanese-language version of the Niconico video sharing service.

After loading the model, input any text you wish to analyze. Please note that, because the model was trained on Japanese-language data, it will perform better on Japanese-style emoticons and text art. You can try the following search queries to find some Japanese-style text art:

Once analysis has finished and the results are shown, you can use the slider below the results to adjust the contrast, which will make the category with the highest predicted probability stand out.

AA

Kaomoji

Emoji

Text

Suzuki [1] proposed a method to extract spans of ASCII art from multiple lines of text. However, their method relies on features extracted from one or more full lines of text, which is not compatible with our goal of character-level prediction. Furthermore, in our task, the inputs are individual Niconico comments, which are each handled independently, not long text spans.

AA-chan was trained to detect four different text categories, which are defined as follows:

For the purposes of this task, those were the categories and definitions used. A fifth category, "Danmaku", was also defined, and used to label the screen-filling style of comments known by the same name among Niconico users. However, the category proved poorly defined in practice, and ended up unused in the final model.

::+。゚:゜゚。❤ ❤ ❤ ❤:*:+。::。;:: .:*:+;❤ ❤ ❤。゚:。*. 。゚:゜❤ ❤:゚。゚ 。 ゚、❤ ❤・*゚: *:+。゚’❤ ❤、+。゚:* ゚:゚*。:゚+:。❤ ❤。。゚:゜゚。*。 +。::。゚: +。:。゚+❤ ❤。゚:゚*:。:゚+:。*: :*゚。:。*:+。゚*:。:゚:*+。❤:*:゚:。:+゚。*:+。::。゚+:

□□□■□□□□□□□□□□□□□□■□■□□□□□□□□□□□□□□□■□□□□ ■■■■■■■■■□□■□□□□□■■□■□□■■■■■□□□□□□□□■□□□□ □□□■□□□□□□□■□□□□□■□□□□□□□□■□□□□□□□□□■□□□□ □□■■■■■■□□□■□■■■■■■■□□□□□■□□□□□□□□□□■■■■■ □■□■□□■□■□□■□□□□□■□□□□□□■■■■■□□□□□□□■□□□□ ■□□■□□■□□■□■□□□□□■□□□□□■□□□□□■□□□□□□■□□□□ ■□□■□■□□□■□■□□□□□■□□□□■□□□□□□□■□□□□□■□□□□ ■□□■□■□□□■□■□□□□□■□□□□□□□□□□□□■□□□□□■□□□□ ■□□■■□□□□■□■□□□□□■□□□□□□□■■□□□■□□■■■■■□□□ □■■■□□□□■□□■□□□□■□□□□□□□■□□■□■□□■□□□■□■■□ □□□□□■■■□□□□□□■■□□□□□□□□□■■■■□□□□■■■□□□□■

♫彡。.:・¤゚♫彡。.:・¤゚♫彡。.:・¤゚♫彡。.:・*゚ *・゚゚・*:.。..。.:*゚:*:・* ✩ * ・* ✩ * ・*゚・*:.。.*.:*・゚.:*・゚* ☆彡.。.:*・☆彡.。.:*・☆彡.。.:*・・* ✩ * ・* ✩ * ・* ☆彡.。.:*・☆彡.。.:*・☆彡.。.:*・゚・*:.。.*.:*・゚.:*・゚* ☆.。.:*・°☆.。.:*・°☆.。.:*・°☆.。.:*・°☆*:.. ゚*。:゚ .゚*。:゚ .゚*。:゚ .゚*。:゚ .゚*。:゚゚*。:゚ .゚*。:゚ .゚*。:゚ .゚*。:゚ .゚*。:゚ ☆.。.:*・°☆.。.:*・°☆.。.:*・°☆.。.:*・°☆*:.. ☆彡.。.:*・☆彡.。.:*・☆彡.。.:*・*・゚゚・*:.。..。.:*゚:*: ゚・*:.。.*.:*・゚.:*・゚*・* ✩ * ・* ✩ * ・**・゚゚・*:.。..。.:*゚:*: ☆.。.:*・°☆.。.:*・°☆.。.:*・°☆.。.:*・°☆*:.. ♫彡。.:・¤゚♫彡。.:・¤゚♫彡。.:・¤゚♫彡。.:・*゚

(`・∀・)

( *´ω`* )

ヾ(๑◕▽◕๑)ノ

💩

☂

🙄

Niconico video comments were used as the basis of the dataset.

Several hundred thousand comments were sampled from Niconico comment logs from 2007 to 2018. Each comment was labeled with one or more text categories, as above. For instance, the comment:

キタワァ*・゜・*:.。..。.:*・゜(n‘∀‘)η゚・*:.。. .。.:*・゜・* !!

contains the text キタワァ and the kaomoji (n‘∀‘)η, with decorative ASCII art in between, and would thus be labeled aa,kaomoji,text. Although each comment was labeled based on the text categories it contained, the location of each category within the comment was not specified. Training the model to predict the labels at the comment level nevertheless allowed it to learn the character-level position of each label.
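As a rough illustration of what a comment-level multilabel target looks like, the sketch below turns a label string such as aa,kaomoji,text into a multi-hot vector. The label order and the lowercase name "emoji" are assumptions made for this example; the source only shows the strings aa, kaomoji, and text.

```python
# Assumed label order for this sketch; "emoji" as a label string is also an assumption.
LABELS = ["aa", "kaomoji", "emoji", "text"]


def encode_labels(label_field: str) -> list[int]:
    """Turn a comma-separated label string into a multi-hot vector."""
    present = {name.strip() for name in label_field.split(",") if name.strip()}
    unknown = present - set(LABELS)
    if unknown:
        raise ValueError(f"unknown label(s): {sorted(unknown)}")
    # One slot per category: 1 if the comment contains it, 0 otherwise.
    return [1 if name in present else 0 for name in LABELS]


print(encode_labels("aa,kaomoji,text"))  # [1, 1, 0, 1]
```

Note that the target says nothing about where in the comment each category occurs, which is exactly the information the model has to discover on its own.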

The dataset was created using bootstrapping: a small amount of data was first labeled manually, a simple predictive model was trained on that data, and the trained model was then used to semi-automatically label additional data. Instances the model was confident about were automatically assigned labels, while the remaining instances were manually corrected. This additional data was then used to train a better model, and so on, eventually resulting in a dataset of several hundred thousand labeled comments.
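One step of such a bootstrapping loop might look like the sketch below, which splits model predictions into automatically accepted labels and a manual review queue. The 0.95 threshold, the function name, and the probabilities in the example are all hypothetical; the source does not say how confidence was measured.

```python
def split_by_confidence(predictions, threshold=0.95):
    """Split predictions into auto-accepted labels and a manual review queue.

    predictions: list of (comment, {label: probability}) pairs.
    A comment is auto-labeled only if every label probability is decisive,
    i.e. either >= threshold or <= 1 - threshold (an assumed criterion).
    """
    auto_labeled, needs_review = [], []
    for comment, probs in predictions:
        confident = all(p >= threshold or p <= 1 - threshold for p in probs.values())
        if confident:
            labels = sorted(name for name, p in probs.items() if p >= threshold)
            auto_labeled.append((comment, labels))
        else:
            needs_review.append((comment, probs))
    return auto_labeled, needs_review


# Made-up probabilities, for illustration only.
preds = [
    ("うどんげは俺の嫁", {"aa": 0.01, "kaomoji": 0.02, "emoji": 0.01, "text": 0.99}),
    ("(´・ω・`)こんなえがかきたい。。。", {"aa": 0.40, "kaomoji": 0.97, "emoji": 0.01, "text": 0.98}),
]
auto, review = split_by_confidence(preds)
print(auto)         # [('うどんげは俺の嫁', ['text'])]
print(len(review))  # 1
```

The second comment goes to manual review because the model is undecided about the aa label, even though it is confident about the others.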

Due to an accident, the original data used to train the model was lost, but the reconstructed dataset is available for download below.

The above zip file contains two files: all_annotated.tsv, which contains the data, and README, which contains an explanation of the data (in Japanese).

The data format is as follows:

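| id | group_id | vpos | command | message | label |
|----|----------|------|---------|---------|-------|
| 0 | sm8896-0 | 0 | shita pink | かーたぐーるまー | text |
| 1 | sm8896-0 | 0 | None | うどんげは俺の嫁 | text |
| 2 | sm8896-0 | 0 | None | されど鈴仙は俺の嫁 | text |
| 3 | sm8896-1 | 0 | None | (´・ω・`)こんなえがかきたい。。。 | kaomoji,text |
| ... | | | | | |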

Each row contains a unique ID, the body of the comment, the assigned label(s), and several other attributes that did not end up being used in the AA-chan task. The vpos column is the video time (in centiseconds) at which the comment was made. The command column contains formatting options such as color and position. The group_id column is an identifier composed of the source video ID and a group ID within that video. Because ASCII art in Niconico comments often spans multiple comments, this group ID allows comments belonging to the same artwork to be grouped together.

If you wish to make use of this column, be aware that consecutive comments with identical label(s) were automatically assigned the same group ID, so unrelated text comments that happened to be adjacent will also be grouped together.
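For readers who want to work with the file, the following sketch reads rows in this format and collects comments by group_id. It assumes the file is tab-separated with a header row, as the .tsv extension and the sample suggest, and it uses a small inline sample rather than the real all_annotated.tsv. The function name load_groups is hypothetical.

```python
import csv
import io
from collections import defaultdict

# Inline sample mirroring the rows shown above (assumed tab-separated with a header).
SAMPLE = (
    "id\tgroup_id\tvpos\tcommand\tmessage\tlabel\n"
    "0\tsm8896-0\t0\tshita pink\tかーたぐーるまー\ttext\n"
    "1\tsm8896-0\t0\tNone\tうどんげは俺の嫁\ttext\n"
    "2\tsm8896-0\t0\tNone\tされど鈴仙は俺の嫁\ttext\n"
    "3\tsm8896-1\t0\tNone\t(´・ω・`)こんなえがかきたい。。。\tkaomoji,text\n"
)


def load_groups(handle):
    """Group (message, labels) pairs by group_id."""
    # QUOTE_NONE keeps quote characters inside comments from being interpreted.
    reader = csv.DictReader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    groups = defaultdict(list)
    for row in reader:
        labels = row["label"].split(",")
        groups[row["group_id"]].append((row["message"], labels))
    return groups


groups = load_groups(io.StringIO(SAMPLE))
for group_id, comments in groups.items():
    print(group_id, len(comments))
```

To read the actual file, something like `open("all_annotated.tsv", encoding="utf-8", newline="")` could be passed in place of the StringIO object; the UTF-8 encoding is an assumption. Given the caveat above, groups that contain only text comments are probably best treated as independent comments rather than as a single artwork.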

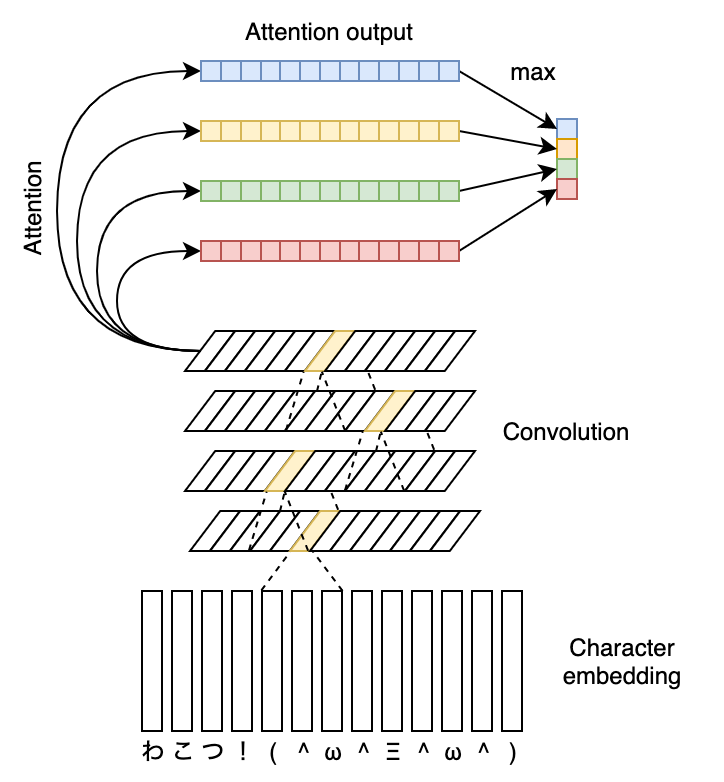

The architecture of AA-chan is shown above. AA-chan makes use of the popular "attention" mechanism, modified to suit the task.

Attention is generally used to weight the input units to part of a neural network. In the case of text, the units that make up the input may be words or characters. Of these, some words or characters may be more important than the others for helping the model to make its prediction.

An attention mechanism assigns higher weights to the units it believes are important, thus allowing the model to focus on them. The model is not explicitly taught which units are important, but rather implicitly learns to identify them as it solves the task it has been given.

In practice, this often results in weightings that make sense to humans. For instance, when a machine translation system produces "l'homme", it will assign the highest attention weights to "the man" in the source sentence [2]. Similarly, in order to predict the presence of a dog, an image analysis system may assign higher attention weights to the parts of an image where the dog is located [3].

However, not all attention outputs are as easily interpretable. Clark et al. [4] analyzed a language model that made use of many layers of attention, and while they found certain attention layers learned to detect specific grammatical phenomena, other layers put all their weight on seemingly unimportant punctuation, for reasons that the human researchers could only hypothesize about. Other work [5] has raised concerns about the interpretability of attention mechanisms and relying on them as an explanation for a model's behavior. After all, as long as its predictions are accurate, nothing is forcing a neural network to produce human-interpretable attention weights.

As such, AA-chan does not use attention output as weights. Instead, it essentially treats attention as prediction. A separate attention mechanism is provided for each label, and the character-level attention weights are taken to mean the probability that each character is assigned each label.

The model's training task is to assign labels to each comment, that is, predict which text category or categories are present in the comment. If the attention weights are interpreted as the character-level probabilities of each label, then they can also be used to directly compute a comment-level probability for the existence of that label. By using attention weights directly like this, a certain level of interpretability is ensured.
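The text does not spell out how the character-level probabilities are combined into a comment-level probability. One plausible choice, shown here purely as an assumption, is a noisy-OR: the label is present in the comment if at least one character carries it. The sketch below computes that value for a single label and scores it against the comment-level target with binary cross-entropy. The per-character probabilities are invented for illustration.

```python
import math


def comment_probability(char_probs):
    """Noisy-OR over characters: P(at least one character has the label).

    Treats characters as independent, which is an assumption of this sketch,
    not something stated in the article.
    """
    return 1.0 - math.prod(1.0 - p for p in char_probs)


def binary_cross_entropy(prob, target, eps=1e-7):
    """Loss between a predicted comment-level probability and a 0/1 target."""
    prob = min(max(prob, eps), 1.0 - eps)  # avoid log(0)
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))


# Hypothetical per-character "kaomoji" probabilities for the 8 characters of "w(´・ω・`)".
kaomoji_probs = [0.05, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9]
p = comment_probability(kaomoji_probs)
loss = binary_cross_entropy(p, target=1)
print(p > 0.99, loss < 0.01)  # True True
```

Taking the maximum over characters would be another reasonable aggregation. In either case, the only supervision is the comment-level target, so the gradient pushes up the character probabilities wherever doing so best explains the label.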

As with other attention mechanisms, it is left up to the model where to assign the attention weights. The hope is that, by learning to predict the existence of each label, it will also learn to predict their positions. You can test the results in the demo above. How does the model do? Is it able to find what parts of your input are what?

This article introduced AA-chan, a neural network for detecting and locating various text categories in a given text input. Although positional information was not included in the training data, the model was able to learn it using a modified attention mechanism. A reproduction of the data used is available for download, for anyone interested in running their own experiments.