This article is automatically translated.

Hello, this is Sasaki from Dwango Media Village (DMV). Deep learning, which fits the parameters of large neural networks to massive amounts of data, is computationally expensive. GPUs are generally used for acceleration, but even then a single experiment can take anywhere from several days to weeks.

In this article, I will introduce the results of our evaluation of speed improvement in deep learning using Tensor Processing Units (TPUs) as part of a strategic PoC (Proof of Concept) with Google.

TPUs are machine learning computation devices provided by Google. They offer higher computational power compared to GPUs, potentially drastically reducing training times. At DMV, we conduct training experiments using GPUs, but depending on the scale of the model and the amount of data, it can take several weeks. If we could significantly speed up experiments, we could try more hyperparameters and model structures. In this evaluation, we aimed to see how much speed improvement we could achieve by migrating the deep learning models we typically handle to a TPU environment.

TPUs can be used from Google Colaboratory or from Virtual Machines (VMs) on Google Cloud. Google Colab makes it easy to set up a TPU environment, but for incremental development and code management we opted to use VMs. As of spring 2022, there are two ways to use TPUs from a VM: “TPU Nodes” and “TPU VMs.” With TPU Nodes, a user VM connects over gRPC to a separate TPU host VM. With TPU VMs, your code runs directly on the TPU host VM, which avoids gRPC and allows faster communication. However, the specifications of a TPU VM (CPU and memory) are predetermined.

Computation on TPUs goes through XLA (Accelerated Linear Algebra), a compiler specialized for linear algebra. Python frameworks such as PyTorch, JAX, and TensorFlow provide interfaces to XLA. Since our implementation was originally written in PyTorch, we used PyTorch XLA, the package that adds XLA support. For the most part, migrating from PyTorch to PyTorch XLA means replacing the GPU device (torch.device) with PyTorch XLA’s TPU device. However, because XLA executes computations asynchronously, the code must explicitly synchronize with the device after steps such as Optimizer.step. In addition, one TPU has 8 cores, each exposed as a separate torch device, so running them in parallel requires extra code to synchronize the per-core processes.
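The device swap and the explicit synchronization described above can be sketched as follows. This is a minimal illustration, not the code used in the evaluation: the tiny model, the random data, and the `train_step` helper are hypothetical, and the calls `xm.xla_device()` and `xm.mark_step()` are the `torch_xla.core.xla_model` functions as they existed around the time of writing. The sketch falls back to the CPU when PyTorch XLA is not installed, so it can be tried anywhere.

```python
import torch
import torch.nn as nn

try:
    # Only importable where PyTorch XLA is installed (e.g. on a TPU VM).
    import torch_xla.core.xla_model as xm
except ImportError:
    xm = None  # assumption for this sketch: fall back to CPU


def get_device():
    # The main migration step: use the XLA device instead of torch.device("cuda").
    return xm.xla_device() if xm is not None else torch.device("cpu")


def train_step(model, optimizer, loss_fn, inputs, targets):
    optimizer.zero_grad()
    loss = loss_fn(model(inputs), targets)
    loss.backward()
    optimizer.step()
    if xm is not None:
        # XLA records operations lazily; mark_step() ends the current graph
        # and hands it to the device for execution.
        xm.mark_step()
    return loss


device = get_device()
torch.manual_seed(0)

# Hypothetical stand-in model and data, unrelated to NAGA or StyleGAN3.
model = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 4)).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.CrossEntropyLoss()
inputs = torch.randn(64, 16, device=device)
targets = torch.randint(0, 4, (64,), device=device)

# .item() copies the value to the host, which also forces synchronization.
losses = [train_step(model, optimizer, loss_fn, inputs, targets).item() for _ in range(5)]
print(losses)
```

Multi-core training additionally needs one process per core and gradient synchronization between them, which this single-device sketch leaves out.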

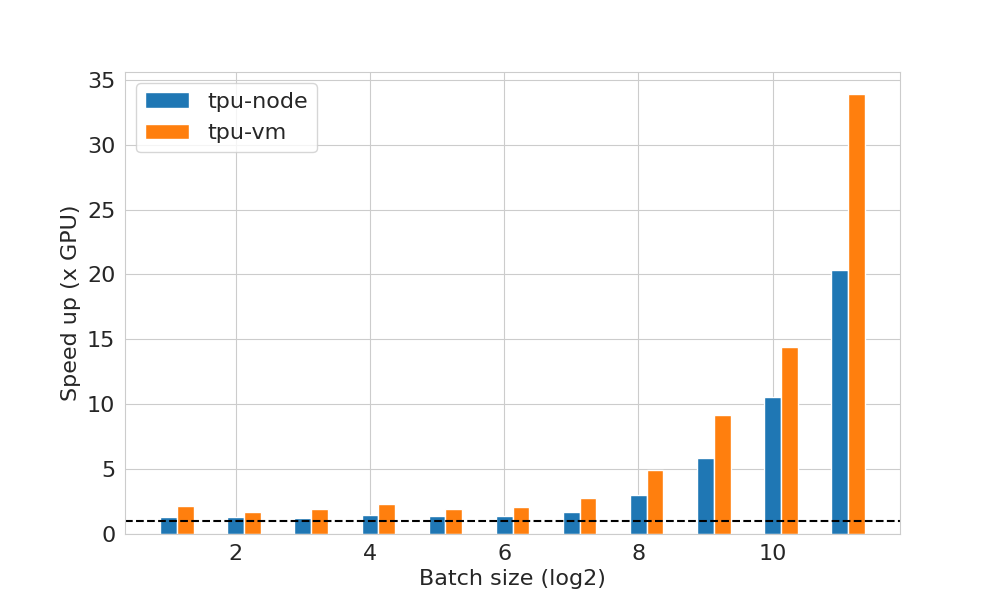

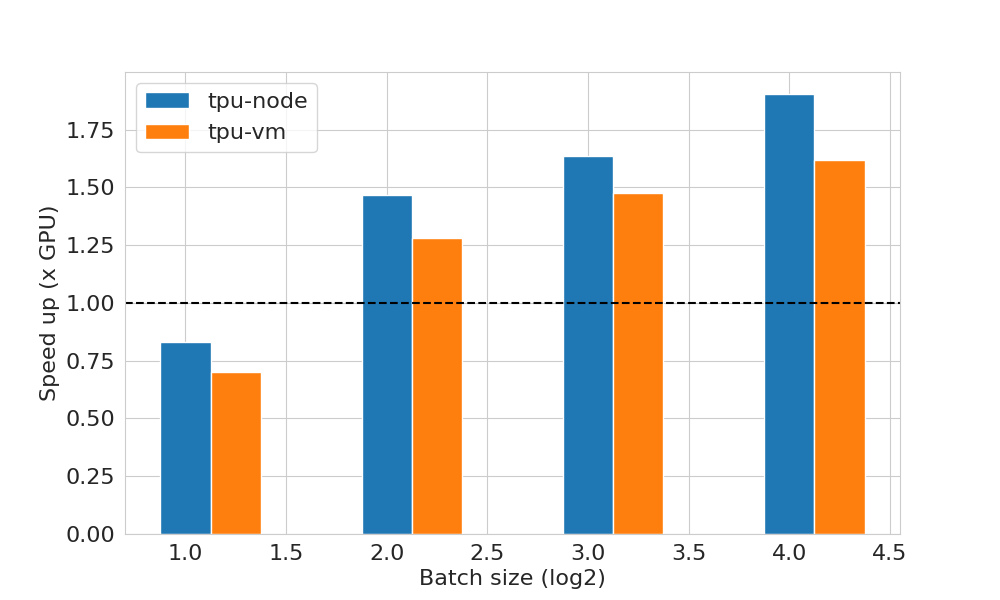

First, I will introduce the results of a throughput comparison without data IO. Deep learning training involves four steps: data IO, forward calculation, backward calculation, and parameter updates. In this experiment, we investigated the learning throughput, i.e., the number of samples that can be trained per unit time for the three steps excluding data IO.

We examined two models: the NAGA model and StyleGAN3. The NAGA model is the core model of DMV’s Mahjong AI NAGA and consists primarily of Feed Forward layers similar to those in GPT-3. StyleGAN3 is a Convolutional Neural Network (CNN)-based model used for image generation. Starting from the official implementation that accompanies the paper, we replaced its custom Ops, which PyTorch XLA does not support, with basic PyTorch Ops. Both models were implemented in PyTorch and migrated to the TPU environment.

The GPU baseline was a single RTX 8000 (with cuDNN + CUDA), trained using PyTorch without special optimizations such as Torch JIT. For the TPU, we used a v3-8 (8 cores, each appearing as a separate device much like a GPU) and used only one core for a fair comparison with a single GPU.

The figures above show throughput relative to the GPU at various batch sizes. The horizontal axis is the batch size on a base-2 logarithmic scale, and the vertical axis is the number of samples trained per unit time divided by the same number for the GPU. A ratio of 1 means the same speed as the GPU, while 2 means twice as fast.
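The throughput and the ratio plotted in the figures can be computed along the following lines. This is a sketch under assumptions, not the measurement script used for the article: `measure_throughput`, `throughput_ratio`, the warm-up count, and the dummy step are hypothetical names and values chosen for illustration.

```python
import time


def measure_throughput(step_fn, batch_size, n_steps=20, n_warmup=3):
    """Return samples trained per second for a training step without data IO.

    step_fn must run forward, backward, and the parameter update for one
    batch, and must block until the device has finished (on asynchronous
    devices such as TPUs or GPUs, synchronize before returning).
    """
    for _ in range(n_warmup):  # exclude compilation and cache warm-up
        step_fn()
    start = time.perf_counter()
    for _ in range(n_steps):
        step_fn()
    elapsed = time.perf_counter() - start
    return batch_size * n_steps / elapsed


def throughput_ratio(device_throughput, gpu_throughput):
    """Ratio plotted on the vertical axis: 1.0 = same speed as the GPU."""
    return device_throughput / gpu_throughput


def dummy_step():
    # Placeholder workload standing in for a real training step.
    sum(range(1000))


samples_per_second = measure_throughput(dummy_step, batch_size=512, n_steps=5, n_warmup=1)
print(throughput_ratio(200.0, 100.0))  # a device twice as fast as the GPU
```

Warm-up steps matter especially with XLA, since the first steps include compilation time that would otherwise distort the measurement.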

For the NAGA model, the TPU was faster than the GPU at every batch size, with both TPU Nodes and TPU VMs. The larger the batch size, the greater the speedup. TPU VMs gave a larger speedup than TPU Nodes: at batch size \(2^{11}\) (=2048), the TPU VM’s speedup over the GPU was nearly 5 times that of the TPU Node. This is likely because gRPC communication becomes a bottleneck as the batch size increases.

In contrast, StyleGAN3 did not show significant speed improvement with increased batch size. This model involves more tensor operations than the NAGA model, such as doubling the resolution of feature maps before applying activation functions, which likely contributed to the limited speed improvement.
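To give a feel for the kind of operation mentioned above, here is a rough sketch of an "upsample, activate, downsample" block written with basic PyTorch Ops only. It is not the StyleGAN3 implementation or our port of it: bilinear interpolation and average pooling are stand-ins assumed here in place of the model's actual resampling filters, and `upsampled_activation` is a hypothetical name.

```python
import torch
import torch.nn.functional as F


def upsampled_activation(x, slope=0.2):
    # Double the spatial resolution before the nonlinearity
    # (stand-in for the model's real upsampling filter).
    up = F.interpolate(x, scale_factor=2, mode="bilinear", align_corners=False)
    # The activation runs on a tensor with 4x as many elements as the input.
    act = F.leaky_relu(up, negative_slope=slope)
    # Return to the original resolution (stand-in for the real downsampling filter).
    return F.avg_pool2d(act, kernel_size=2)


x = torch.randn(2, 8, 16, 16)  # (batch, channels, height, width)
y = upsampled_activation(x)
print(y.shape)
```

Each layer therefore performs several extra tensor operations on enlarged intermediate feature maps, in addition to the convolution itself.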

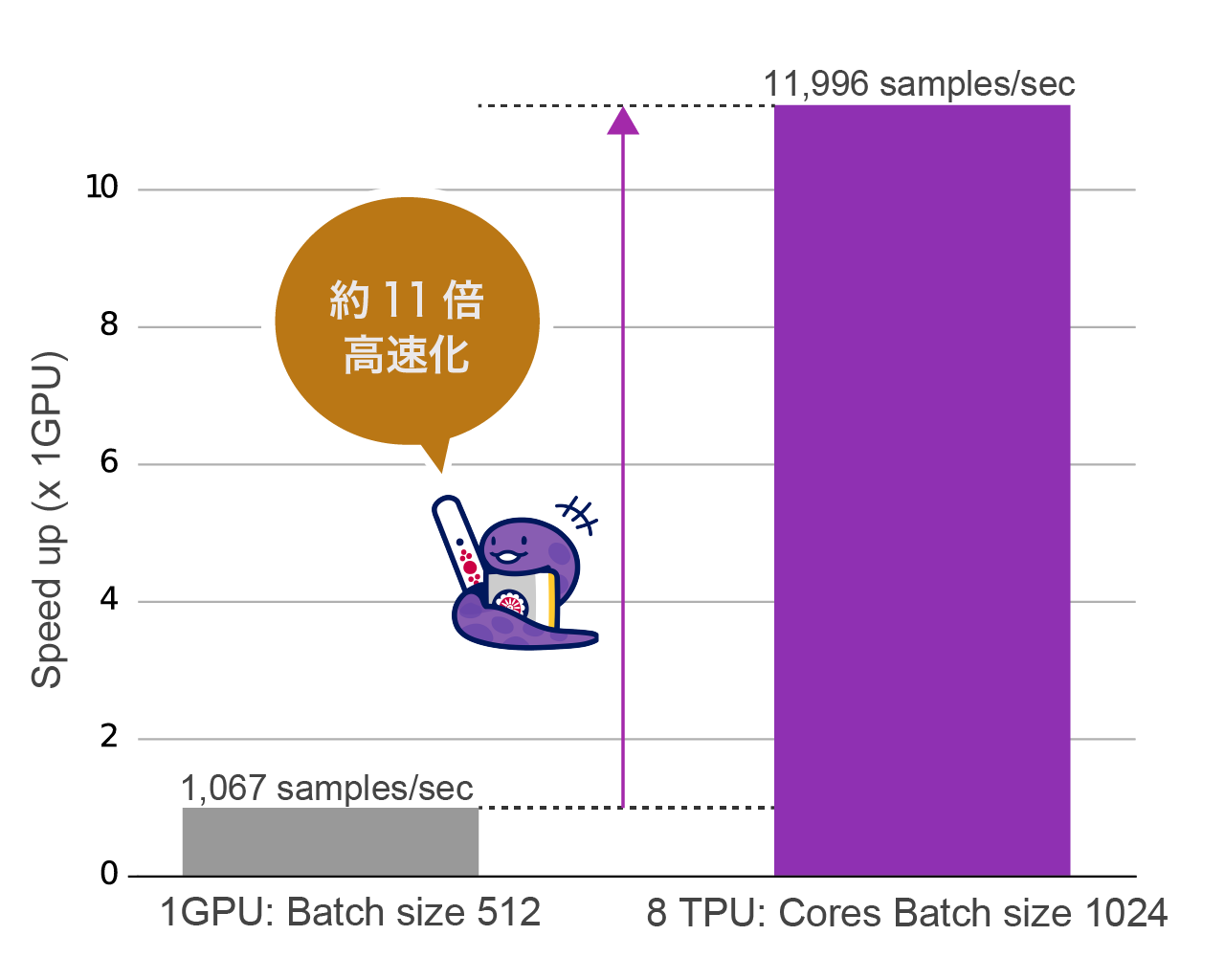

Based on the above results, we ran training experiments with the NAGA model on a TPU VM, this time including large-scale data IO. As the previous experiment showed, TPUs can perform the steps from forward calculation to parameter updates faster than GPUs. As stated in Google’s official blog, data IO can become the bottleneck in such cases. We therefore distributed data IO by using all 8 cores of a single TPU device in parallel. Specifically, we prepared one CPU process and one copy of the model per core, with each process handling data IO and training for its core, which amounts to data-parallel training. Each core used a batch size of 1024, so 8192 samples were trained in one learning step. The GPU side used a single GPU with a batch size of 512, as in the previous experiment.
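The batch arithmetic and the idea of giving each core its own slice of the data can be sketched as below. The article does not describe how samples were assigned to cores, so the strided split here is an assumption, and `NUM_SAMPLES`, `shard_indices`, and `batches_for_core` are hypothetical names used only for illustration.

```python
NUM_CORES = 8          # cores on one TPU v3-8
PER_CORE_BATCH = 1024  # batch size per core, as in the experiment
GLOBAL_BATCH = NUM_CORES * PER_CORE_BATCH  # samples per learning step

NUM_SAMPLES = 20000    # hypothetical dataset size for this sketch


def shard_indices(num_samples, num_replicas, rank):
    """Strided split: core `rank` reads samples rank, rank + num_replicas, ..."""
    return list(range(rank, num_samples, num_replicas))


def batches_for_core(indices, batch_size):
    """Yield full batches from one core's shard, dropping the last partial one."""
    for i in range(0, len(indices) - batch_size + 1, batch_size):
        yield indices[i:i + batch_size]


# One shard per core; each per-core process would load only its own shard.
shards = [shard_indices(NUM_SAMPLES, NUM_CORES, rank) for rank in range(NUM_CORES)]

# In one learning step, every core consumes one batch from its shard.
first_step = [next(batches_for_core(shard, PER_CORE_BATCH)) for shard in shards]
samples_in_step = [i for batch in first_step for i in batch]
print(GLOBAL_BATCH, len(samples_in_step))
```

Because the shards are disjoint, the 8 processes read different samples in parallel, which spreads the data IO load across the host CPU.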

The figure above compares the number of samples trained per second, calculated from the time taken to complete training. We achieved an 11.23x speedup over the GPU. Training the NAGA model, which took about 20-30 days in a GPU environment, was shortened to a few days by migrating to TPU.
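As a rough check of these figures, the snippet below divides the GPU training time by the measured speedup. It assumes that the 11.23x ratio holds for the whole training run, which is a simplification; `estimated_tpu_days` is a hypothetical helper.

```python
SPEEDUP = 11.23  # measured samples-per-second ratio, TPU over GPU


def estimated_tpu_days(gpu_days, speedup=SPEEDUP):
    # Same number of samples, processed `speedup` times faster.
    return gpu_days / speedup


low = estimated_tpu_days(20)
high = estimated_tpu_days(30)
print(f"{low:.2f} to {high:.2f} days")
```

Under that assumption, a 20-30 day GPU run comes out at roughly 2 to 3 days, in line with the "few days" reported above.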

In addition to the speed improvement, we observed another beneficial effect. Increasing the batch size improved the independence of the samples, which stabilized training. When we evaluated the trained model through self-play in Mahjong, the performance of the analysis engine improved significantly. This model is currently used in the new version of NAGA.

In this article, we introduced the results of our evaluation of speed improvement in deep learning using TPUs. The throughput comparison without data IO showed that the speedup depends on the batch size and the model type. In the experiment using multiple TPU cores, training was approximately 11 times faster than on a single GPU.

While TPUs can provide significant speed improvements, there are points to be aware of. Migrating a GPU-based PyTorch implementation to a single TPU core usually requires little additional code, but operations that XLA does not support must be avoided. The speedup also varies with the model structure and batch size. Using multiple TPU cores for further speedup requires additional changes to the implementation. Nevertheless, given the potential to reduce weeks-long computations to just a few days, TPUs are well worth exploring.

Google Cloud, Tensor Processing Unit, Colaboratory, and TensorFlow are trademarks of Google LLC.