This article is automatically translated.

This is Sasaki from Dwango Media Village. I would like to introduce the reinforcement learning framework RLlib, which I recently started using.

Deep reinforcement learning is one of the machine learning technologies that has been gaining attention recently and is actively researched. Unlike supervised learning, which requires a large amount of sample data, reinforcement learning requires experiential data obtained from the interaction between the environment and the agent. Therefore, efficiently collecting interaction experience data is crucial. By parallelizing the process of collecting experience data among process nodes, the collection efficiency can be increased, but performance can vary greatly depending on the implementation. Moreover, with new learning and exploration methods being proposed daily, implementing experimental programs supporting the latest learning methods from scratch is a significant task.

That’s why we decided to use RLlib [Liang et al., 2018] as a framework for experiments. It implements representative methods, including Ape-X, an algorithm for fast-converging Q-learning [Horgan et al., 2018]. Another reason is the high number of GitHub stars, indicating that many people seem to be using it.

RLlib is convenient, but because it supports many algorithms it has a large number of configuration items, and the documentation explains them only briefly. In this article, I therefore explain the learning configuration items of RLlib using Ape-X as an example. First, I give an overview of Ape-X and RLlib as an introduction. Then, I show how to start learning with RLlib as a simple usage example. Finally, I explain the configuration items for learning, focusing on those related to Ape-X.

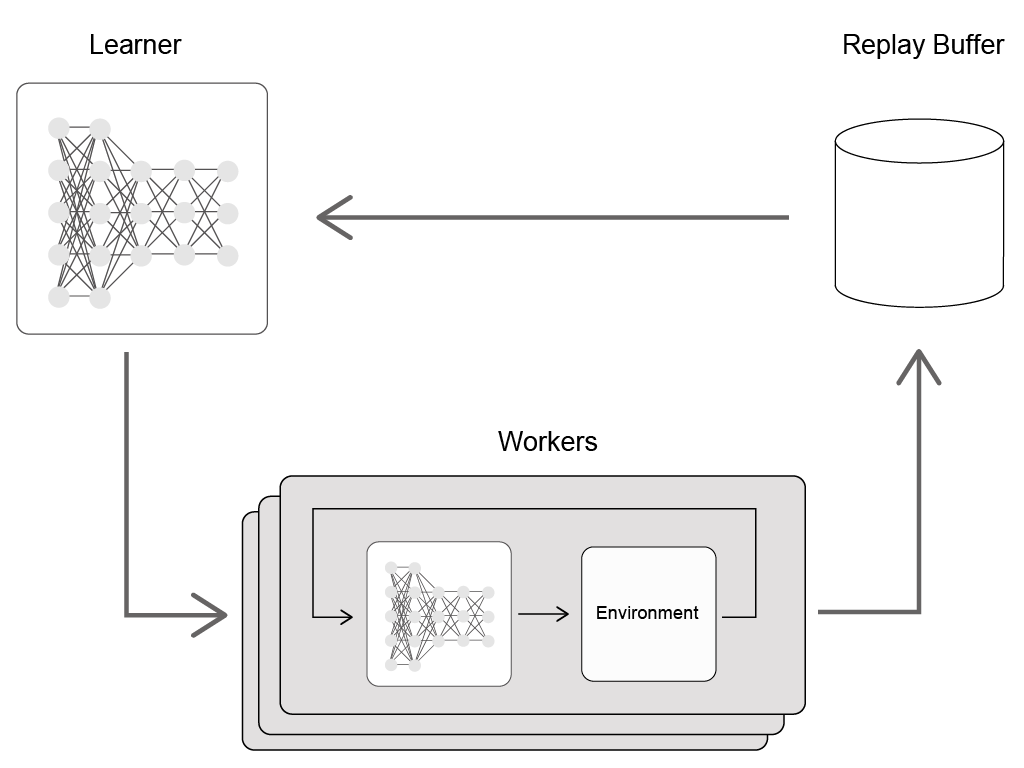

Ape-X is a type of DQN proposed in the paper Distributed Prioritized Experience Replay [Horgan et al., 2018].

Ape-X combines methods proposed in the past to improve the performance of DQN and introduces distributed learning. It runs multiple exploration workers in parallel, stores the obtained experience data in a replay buffer, and updates the Q-Network parameters in bulk using the GPU. The batch input used for gradient calculation is selected from the replay buffer based on the priority derived from the TD error. Specifically, it introduces the following three methods:

RLlib is a subpackage of Ray, a distributed execution library for Python, and can be combined with another Ray subpackage, Tune, when conducting learning experiments.

Ray is a library for executing functions asynchronously, exchanging data through immutable objects shared between processes and nodes. When a Python function foo is defined with the @ray.remote decorator, foo.remote() becomes callable. Calling foo.remote() does not evaluate the result on the spot; instead, the execution is scheduled by the scheduler, and the call immediately returns the unique ID of the shared object that will hold the result. Passing this ID to ray.get() blocks until the computation has finished and returns its value.

The scheduler for executing multiple computation processes can be started with ray.init(). Conducting learning with RLlib corresponds to scheduling computations with the scheduler. It is possible to start a new server each time or register the computation execution with a running server via the network.

Tune is a library for conducting and visualizing learning experiments and hyperparameter estimation. It implements functions to combine multiple learning experiments with meta inference algorithms for parameter exploration. Learning experiments are managed as Experiments, queued, and executed sequentially.

For this example, we assume conducting a single reinforcement learning experiment, similar to the learning sample script in RLlib.

To conduct a learning experiment, simply pass a dictionary describing the experiment to ray.tune.tune.run_experiments(). The dictionary contains the following:

~/ray_results.

The run parameter needs to specify the name of an algorithm, such as A3C or IMPALA. See the RLlib page for details. For reinforcement learning, an Agent class corresponding to the name is initialized, and the main function _train() is called multiple times.

Ape-X is implemented in RLlib as a special case of DQN. The APEXAgent class inherits from the DQNAgent class, and the main parameters are described in the Ray source along with their default settings. To use settings other than the defaults, add them as a dictionary under config in the Experiment settings above. Recommended config settings, together with their results, are also published.
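As a rough illustration of such an experiment description, the sketch below builds the dictionary as plain Python data. The experiment name, the environment ID, the stopping criterion, and the concrete values are assumptions chosen for illustration; only the run, config, and checkpoint_freq items and the ~/ray_results directory appear in the text above.

```python
# Hypothetical experiment description for a single Ape-X run.
# The experiment name, environment, stop criterion, and values are
# illustrative assumptions, not settings taken from the article.
experiments = {
    "apex-pong-example": {
        "run": "APEX",                          # name of the algorithm
        "env": "PongNoFrameskip-v4",            # Gym environment ID (assumed)
        "stop": {"timesteps_total": 1000000},   # when to end the experiment (assumed)
        "checkpoint_freq": 10,                  # checkpoint every 10 iterations
        "local_dir": "~/ray_results",           # where results are written
        "config": {
            # Only the items that should differ from the defaults go here.
            "num_workers": 8,
        },
    },
}

# With Ray installed, the experiment would be started roughly like this:
#   import ray
#   from ray.tune.tune import run_experiments
#   ray.init()
#   run_experiments(experiments)
```

Keeping the description as ordinary data makes it easy to generate several variants of the same experiment by copying the dictionary and changing only the config entry.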

To customize learning, you need to modify the config. Below is an explanation of its items.

Since there are many items, they are categorized for explanation. Note that the categories do not correspond to the implementation.

Workers are the processes that perform exploration in parallel. They need to be configured according to the resources (CPU, GPU) of the machine on which learning is conducted. The number of environments explored in parallel is num_workers × num_envs_per_worker.

| Name | Type | Description |
|---|---|---|
| num_workers | int | Number of workers |
| num_cpus_per_worker | int | Number of CPUs allocated per worker |
| num_gpus_per_worker | int | Number of GPUs allocated per worker |
| num_envs_per_worker | int | Number of environments per worker |
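The arithmetic behind these items can be checked before launching anything. The following is a small sketch with made-up values; the helper functions are hypothetical and are not part of RLlib.

```python
# Example worker settings; the numbers are illustrative assumptions.
worker_config = {
    "num_workers": 8,
    "num_cpus_per_worker": 1,
    "num_gpus_per_worker": 0,
    "num_envs_per_worker": 4,
}


def parallel_envs(config):
    """Number of environments explored in parallel."""
    return config["num_workers"] * config["num_envs_per_worker"]


def worker_cpus(config):
    """CPUs requested by all exploration workers together."""
    return config["num_workers"] * config["num_cpus_per_worker"]


print(parallel_envs(worker_config))  # 32
print(worker_cpus(worker_config))    # 8
```

Comparing the second number with the CPU count of the machine is a quick way to see whether the requested workers can actually be scheduled.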

In Ape-X, workers use the ε-greedy algorithm to interact with the environment (rollout) and generate experience data. The value of ε is annealed from 1.0 to exploration_final_eps as learning progresses, and the annealing spans the fraction exploration_fraction of schedule_max_timesteps steps. Specifically, the new value of \(\epsilon\), \(\epsilon_{i+1}\), is determined as follows:

where \(\epsilon_{final}\) and \(\epsilon_{0}\) are exploration_final_eps and the initial value of \(\epsilon\) (=1.0), respectively.
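One way to read this schedule is as a linear interpolation between \(\epsilon_{0}\) and \(\epsilon_{final}\). The sketch below assumes exactly that: ε decreases linearly over the first exploration_fraction × schedule_max_timesteps steps and stays at exploration_final_eps afterwards. The function name and the linear form are my assumptions, and the rule used by a given RLlib version may differ.

```python
def linear_epsilon(step, exploration_fraction, schedule_max_timesteps,
                   exploration_final_eps, initial_eps=1.0):
    """Linearly annealed epsilon (an assumed form of the schedule)."""
    anneal_steps = exploration_fraction * schedule_max_timesteps
    if anneal_steps <= 0:
        return exploration_final_eps
    # Progress through the annealing period, capped at 1.0 once it is over.
    progress = min(step / anneal_steps, 1.0)
    return initial_eps + progress * (exploration_final_eps - initial_eps)


for step in (0, 5000, 10000, 50000):
    print(step, linear_epsilon(step, 0.1, 100000, 0.02))
```

With exploration_fraction = 0.1 and schedule_max_timesteps = 100000, ε reaches its final value after 10000 steps and no longer changes after that.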

| Name | Type | Description |
|---|---|---|
| per_worker_exploration | bool | Whether to vary the value of ε for each worker |
| exploration_fraction | float | Fraction of schedule_max_timesteps over which ε is reduced |
| horizon | int/null | Forcibly stops rollout after a specific number of steps |
| learning_starts | int | Number of steps of rollout before starting learning |
| sample_async | bool | Whether to perform rollout asynchronously |
| sample_batch_size | int | Number of steps per batch sent to the replay buffer |
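For reference, Horgan et al. [2018] give each of the \(N\) workers its own fixed value \(\epsilon_i = \epsilon^{1 + \frac{i}{N-1}\alpha}\) with \(\epsilon = 0.4\) and \(\alpha = 7\). The sketch below computes these values; that per_worker_exploration corresponds to this scheme is my assumption, and the function name is hypothetical.

```python
def per_worker_epsilons(num_workers, base_eps=0.4, alpha=7.0):
    """Fixed epsilon for each worker, following the formula in the Ape-X paper."""
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    if num_workers == 1:
        return [base_eps]
    return [
        base_eps ** (1 + i / (num_workers - 1) * alpha)
        for i in range(num_workers)
    ]


for i, eps in enumerate(per_worker_epsilons(4)):
    print(i, round(eps, 5))
```

The first worker explores most often (ε = 0.4), while the last one acts almost greedily (ε = 0.4^8), so the replay buffer receives experiences from a range of exploration levels.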

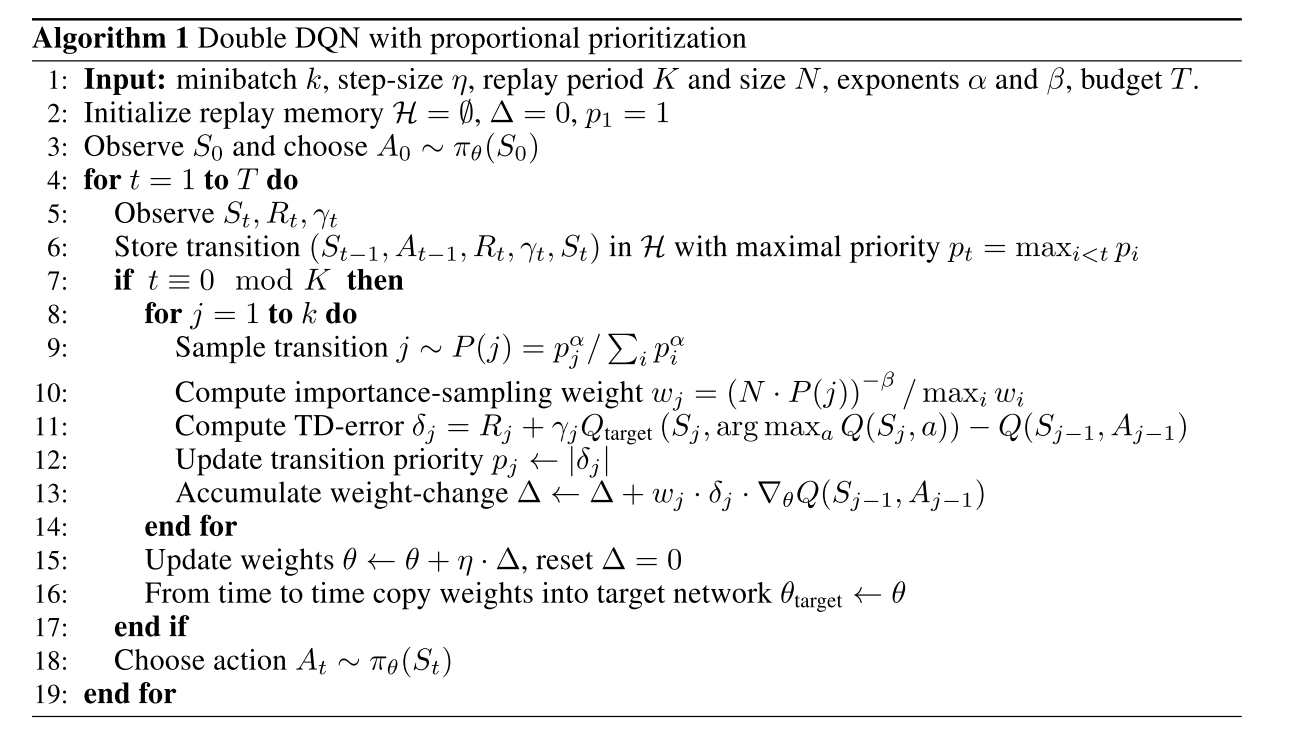

Prioritized Experience Replay [Schaul et al., 2016] assigns priorities to experience data in the replay buffer based on their usefulness for Q-learning, and learns more from high-priority experiences. The priority is determined according to the following algorithm:

In line 9, \(p_i\) is the priority of experience \(i\), defined as the absolute value of the TD error plus a small constant.

\[ p_i=|\delta_i|+\epsilon \]

Using only the TD error to create the probability distribution would result in certain experiences being used repeatedly. Therefore, the distribution is flattened with the exponent \(\alpha\), and the bias this introduces is corrected with importance-sampling weights whose strength is set by \(\beta\). \(\beta\) increases from prioritized_replay_beta to final_prioritized_replay_beta as learning progresses, in the same way that \(\epsilon\) decreases.
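The roles of \(\alpha\) and \(\beta\) can be seen in a small standalone sketch of proportional prioritized sampling in the sense of Schaul et al. [2016]. It uses a plain list instead of RLlib's replay buffer, the function name is hypothetical, and normalizing the weights by the largest weight in the batch is one common choice that I assume here.

```python
import random


def sample_prioritized(td_errors, batch_size, alpha=0.6, beta=0.4,
                       eps=1e-6, rng=None):
    """Sample indices in proportion to p_i ** alpha and return IS weights."""
    rng = rng or random.Random()
    n = len(td_errors)
    # Priority: absolute TD error plus a small constant.
    priorities = [abs(delta) + eps for delta in td_errors]
    # alpha = 0 gives uniform sampling, alpha = 1 full prioritization.
    scaled = [p ** alpha for p in priorities]
    total = sum(scaled)
    probs = [s / total for s in scaled]
    indices = rng.choices(range(n), weights=probs, k=batch_size)
    # Importance-sampling weights correct the bias of non-uniform sampling.
    weights = [(n * probs[i]) ** (-beta) for i in indices]
    max_weight = max(weights)
    weights = [w / max_weight for w in weights]
    return indices, weights, probs


indices, weights, probs = sample_prioritized(
    [0.1, 2.0, 0.5, 0.0], batch_size=3, rng=random.Random(0))
print(indices, weights)
```

Experiences with a large TD error are drawn more often but receive a smaller weight in the loss, and raising \(\beta\) toward 1 makes this correction complete.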

| Name | Type | Description |
|---|---|---|
| buffer_size | int | Size of the buffer |
| prioritized_replay | bool | Whether to use prioritized experience replay |
| worker_side_prioritization | bool | Whether workers prioritize experiences during rollout |
| prioritized_replay_eps | float | Constant \(\epsilon\) added to the absolute TD error when calculating the priority |
| prioritized_replay_alpha | float | Exponent \(\alpha\) that determines how strongly the priority affects the sampling probability |
| beta_annealing_fraction | float | Fraction of schedule_max_timesteps over which \(\beta\) is changed |
| prioritized_replay_beta | float | Initial value of \(\beta\) |
| final_prioritized_replay_beta | float | Upper limit value of \(\beta\) |
| compress_observations | bool | Whether to compress observations using LZ4 when storing them in the replay buffer |

Data processing from the environment is outlined on the Ray page. The preprocessor acts as a wrapper for the environment (gym.Env) and mainly handles preprocessing of observation data. The following four preprocessors are applied for Atari environments (unless custom_preprocessor is specified):

env.reset() is called.

| Name | Type | Description |
|---|---|---|
| preprocessor_pref | string | Method of preprocessing observations |
| observation_filter | string | Processing performed after the preprocessor. The default is NoFilter, which does nothing. |
| synchronize_filters | bool | Whether to synchronize filter parameters per iteration |
| clip_rewards | bool | Whether to limit the reward for each step to \([-1, 1]\). If true, numpy.sign() is used. |
| compress_observations | bool | Whether to compress observations using LZ4 when storing them in the replay buffer |
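To make the effect of clip_rewards concrete, the sketch below applies a sign function to a few made-up rewards. It uses a pure Python stand-in for numpy.sign(), which would give the same values as floats for float input.

```python
def sign(x):
    """Pure Python stand-in for numpy.sign() on a scalar."""
    return (x > 0) - (x < 0)


rewards = [10.0, -0.5, 0.0, 3.0]          # raw rewards (made-up values)
clipped = [sign(r) for r in rewards]
print(clipped)  # [1, -1, 0, 1]
```

Note that this discards the magnitude of the reward entirely: a reward of 10 and a reward of 3 both become 1.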

One iteration corresponds to the following steps. Note that checkpoint_freq is based on this iteration unit.

timesteps_per_iteration steps pass or min_iter_time_s seconds pass, the following is repeated:

Data addition and replay in the replay buffer are performed asynchronously.

| Name | Type | Description |
|---|---|---|
| double_q | bool | Whether to apply Double Q-Learning |
| dueling | bool | Whether to apply Dueling Network |
| gamma | float | Discount rate for rewards |
| n_step | int | Value of n in n-step Q-Learning |
| target_network_update_freq | int | Number of steps between updates of the target Q-network |
| timesteps_per_iteration | int | Number of steps per iteration |
| min_iter_time_s | int | Minimum number of seconds per iteration |
| train_batch_size | int | Size of the batch used to train the Q-Network |
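How gamma, n_step, and double_q enter the learning target can be sketched with plain Python lists. The functions below are hypothetical helpers written for illustration and are not RLlib code: the n-step target adds up n discounted rewards and then bootstraps from a Q-value, and with Double Q-learning that Q-value is taken from the target network at the action the online network prefers.

```python
def double_q_bootstrap(online_q_values, target_q_values):
    """Select the action with the online network, evaluate it with the target network."""
    best_action = max(range(len(online_q_values)),
                      key=lambda a: online_q_values[a])
    return target_q_values[best_action]


def n_step_target(rewards, bootstrap_value, gamma):
    """r_0 + gamma * r_1 + ... + gamma**n * bootstrap_value, with n = len(rewards)."""
    target = bootstrap_value
    for reward in reversed(rewards):
        target = reward + gamma * target
    return target


# Q-values of the state reached after n_step = 3 steps (made-up numbers).
bootstrap = double_q_bootstrap(online_q_values=[0.2, 1.5, 0.7],
                               target_q_values=[3.0, 4.0, 9.0])
print(n_step_target([1.0, 0.0, 2.0], bootstrap, gamma=0.5))  # 2.0
```

In this example the online network prefers the second action, so the bootstrap value is 4.0 rather than the largest target value 9.0, which is how Double Q-learning reduces overestimation.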

Forward and backward computations of the Q-Network are performed using TensorFlow or PyTorch. You can define the structure of the Q-Network yourself, but by default it is determined automatically from the observation_space of the environment. For image observations in particular, a CNN is applied, and you can override the size of the convolution kernels through the settings.

| Name | Type | Description |
|---|---|---|
| hiddens | int | Size of the fully-connected layer after the convolutional layers |
| noisy | bool | Whether to apply Noisy Network [Fortunato et al., 2017]. If true, ε-greedy is not used. |
| sigma0 | float | Initial parameter of the Noisy Network |
| num_atoms | int | Number of atoms in the output distribution of the Q-network. If greater than 1, it becomes distributional Q-learning [Bellemare and Dabney, 2017]. |
| v_min | float | Parameter of distributional Q-learning (lower end of the value range) |
| v_max | float | Parameter of distributional Q-learning (upper end of the value range) |
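In distributional Q-learning as described by Bellemare and Dabney [2017], the return distribution is represented on a fixed grid of evenly spaced values between v_min and v_max. The sketch below shows how num_atoms, v_min, and v_max define that grid and how a Q-value is recovered from it. The function names are hypothetical, and that RLlib builds its support in exactly this way is my assumption.

```python
def support_atoms(v_min, v_max, num_atoms):
    """Evenly spaced return values z_0 .. z_{N-1} between v_min and v_max."""
    if num_atoms < 2:
        raise ValueError("a distribution needs at least two atoms")
    delta = (v_max - v_min) / (num_atoms - 1)
    return [v_min + i * delta for i in range(num_atoms)]


def expected_q(probabilities, atoms):
    """Q-value as the expectation of the predicted return distribution."""
    return sum(p * z for p, z in zip(probabilities, atoms))


atoms = support_atoms(-10.0, 10.0, 5)
print(atoms)                                         # [-10.0, -5.0, 0.0, 5.0, 10.0]
print(expected_q([0.0, 0.0, 0.5, 0.5, 0.0], atoms))  # 2.5
```

Returns outside the range from v_min to v_max cannot be represented, so these two values should cover the returns that are expected after reward clipping and discounting.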

| Name | Type | Description |
|---|---|---|
| optimizer_class | string | Type of optimizer. Use AsyncReplayOptimizer for Ape-X. |
| debug | bool | Toggle debug mode |
| max_weight_sync_delay | int | Minimum number of steps to wait since the last model parameter update |
| num_replay_buffer_shards | int | Parallelism of replay |
| lr | float | Learning rate |
| adam_epsilon | float | ε value of Adam Optimizer |
| grad_norm_clipping | float | Maximum value of the gradient norm |

batch_mode can be either truncate_episodes or complete_episodes. With complete_episodes, experiences are not sent to the buffer until the episode ends.

| Name | Type | Description |
|---|---|---|
| schedule_max_timesteps | int | Step interval for changing hyperparameters based on learning progress |
| gpu | bool | Whether to use GPU |
| gpu_fraction | float | GPU usage rate (0 to 1, where 1 is 100%) |
| monitor | bool | Whether to save rollout results periodically as videos |
| batch_mode | string | Batch sampling method |
| tf_session_args | dict | Parameters when initializing the TensorFlow Session |

tf_session_args can be set as follows. See the TensorFlow documentation for details.
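The following is a sketch of such a setting, not necessarily the values used in my experiments. It assumes that the dictionary is passed on as keyword arguments to TensorFlow's tf.ConfigProto, so the keys are ConfigProto field names; the values themselves are only illustrative.

```python
# Illustrative TensorFlow session settings (assumed to be forwarded to
# tf.ConfigProto); adjust the values to the machine in use.
config = {
    "tf_session_args": {
        "intra_op_parallelism_threads": 2,      # threads used inside a single op
        "inter_op_parallelism_threads": 2,      # threads used across independent ops
        "allow_soft_placement": True,           # fall back to CPU if an op has no GPU kernel
        "log_device_placement": False,          # do not log where each op is placed
        "gpu_options": {"allow_growth": True},  # allocate GPU memory on demand
    },
}
```

Setting allow_growth is useful when several processes share one GPU, because TensorFlow otherwise tends to reserve most of the GPU memory at start-up.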

[horgan2018] [Horgan et al., 2018] Horgan, D., Quan, J., Budden, D., Barth-Maron, G., Hessel, M., van Hasselt, H., & Silver, D. (2018). Distributed Prioritized Experience Replay. In International Conference on Learning Representations (pp. 1–19). https://doi.org/10.1007/11564096

[liang2018] [Liang et al., 2018] Liang, E., Liaw, R., Moritz, P., Nishihara, R., Fox, R., Goldberg, K., … Stoica, I. (2018). RLlib: Abstractions for Distributed Reinforcement Learning. In International Conference on Machine Learning. https://arxiv.org/abs/1712.09381

[scaul2016] [Schaul et al., 2016] Schaul, T., Quan, J., Antonoglou, I., & Silver, D. (2016). Prioritized Experience Replay. In International Conference on Learning Representations (pp. 1–21). http://arxiv.org/abs/1511.05952

[lafferty2010] [Lafferty et al., 2010] Lafferty, J. D., Williams, C. K. I., Shawe-Taylor, J., Zemel, R. S., & Culotta, A. (2010). Double Q-learning. Advances in Neural Information Processing Systems, 23, 2613--2621.

[wang2016] [Wang et al., 2016] Wang, Z., Schaul, T., Hessel, M., van Hasselt, H., Lanctot, M., & de Freitas, N. (2016). Dueling Network Architectures for Deep Reinforcement Learning. In International Conference on Machine Learning (Vol. 48, pp. 1995–2003). https://doi.org/10.1109/MCOM.2016.7378425

[fortunato2017] [Fortunato et al., 2017] Fortunato, M., Azar, M. G., Piot, B., Menick, J., Osband, I., Graves, A., … Legg, S. (2018). Noisy Networks for Exploration. In International Conference on Learning Representations. https://arxiv.org/abs/1706.10295

[bellemare2017] [Bellemare and Dabney, 2017] Bellemare, M. G., & Dabney, W. (2017). A Distributional Perspective on Reinforcement Learning. In International Conference on Machine Learning. https://arxiv.org/abs/1707.06887