Recent studies have reported that distributed and asynchronous architecture designs can improve the sampling efficiency of deep \(Q\)-networks (DQN). Even though several DQN implementations have been published open-source, very few of them support distributed and asynchronous learning. As such, we have released MarltasCore, a DQN implementation that supports distributed and asynchronous training. MarltasCore also supports advanced techniques such as episodic memory and curiosity-driven rewards using random network distillation. The repository is available as an open source project on GitHub. In this article, we describe the design of MarltasCore, the algorithms it implements, and its performance on an Atari game task.

Deep \(Q\)-network (DQN, [Mnih et al. 2015]) is a machine learning approach for creating sequential decision-making systems. Recently, a DQN-based agent outperformed the standard human benchmark on all 57 Atari games [Badia et al. 2020b]. Such agents can be used in place of humans in otherwise time-consuming tasks, such as debugging a game or testing a recommendation system.

The success of DQN algorithms is due not only to their improved methods for approximating action values, but also to effective data sampling techniques. \(Q\)-learning is an online learning method, which means it requires an agent that interacts with an environment to generate training examples. To obtain the large number and variety of training examples required for accurate approximation, multiple asynchronous data sampling processes can be run alongside the optimization of the \(Q\)-network itself. Furthermore, distributing these processes across multiple machines (nodes) makes it feasible to sample data from more computationally expensive environments, such as robot simulations, modern video games, or web browsers.

There are a few single-node asynchronous DQN implementations, such as CleanRL and rlpyt. However, open-source multi-node implementations are very rare, with RLlib being one of the few examples. This is probably due to the high development cost of such libraries.

Therefore, we have released MarltasCore, an open-source DQN implementation that supports distributed asynchronous data sampling across multiple nodes. The above figure shows how sampling efficiency scales with the number of data sampling processes. The X and Y axes correspond to the number of processes and the number of environment steps per second across all actors, respectively.

MarltasCore is composed of multiple intercommunicating modules, as depicted in the animation above. Python’s Global Interpreter Lock limits the performance gains of parallelization within a single process, so most modules run in their own Python processes, forked using the multiprocessing module. Modules send data to each other using gRPC, a Remote Procedure Call framework. gRPC is built on HTTP/2 and allows DQN parameters and sampled data to be shared between modules across multiple machines without requiring an application-specific messaging backend. We chose PyTorch as our neural network training framework.

Below are high-level descriptions of the modules:

| Technique | Ape-X | R2D2 | Never Give Up | Agent57 | MarltasCore |
|---|---|---|---|---|---|
| RNN-DQN | | x | x | x | x |
| Prioritized Experience Replay | x | x | x | x | x |
| Double DQN | x | x | x | x | x |
| Dueling Network | x | x | x | x | x |
| Retrace | | | x | x | |
| Random network distillation | | | x | x | x |
| Episodic Curiosity | | | x | x | x |
| Meta-Controller | | | | x | x |

Distributed asynchronous DQN has been developed through a line of research that began with Ape-X. MarltasCore follows the algorithms proposed in these studies, focusing especially on asynchronous learning and intrinsic reward computation.

Ape-X [Horgan et al. 2018] proposed the use of a distributed asynchronous architecture to train a DQN. It uses a convolutional neural network (CNN) model with a dueling architecture [Wang et al. 2016], which is trained using Double \(Q\)-learning [Lafferty et al. 2010] and the \(n\)-step \(Q\)-learning method [Mnih et al. 2016].
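The three ingredients named above can be illustrated with a small sketch. It uses plain Python lists instead of network outputs, and the function names and toy numbers are illustrative assumptions rather than MarltasCore's API. The dueling head combines a state value with mean-centered advantages, and the training target is an \(n\)-step return bootstrapped with a Double \(Q\)-learning estimate.

```python
from typing import List, Sequence


def dueling_q_values(state_value: float, advantages: Sequence[float]) -> List[float]:
    """Combine V(s) and A(s, a) into Q(s, a), subtracting the mean advantage."""
    mean_adv = sum(advantages) / len(advantages)
    return [state_value + adv - mean_adv for adv in advantages]


def n_step_double_q_target(
    rewards: Sequence[float],
    online_q_next: Sequence[float],
    target_q_next: Sequence[float],
    gamma: float,
    terminal: bool,
) -> float:
    """n-step return bootstrapped with a Double Q-learning estimate."""
    n = len(rewards)
    n_step_return = sum((gamma ** k) * r for k, r in enumerate(rewards))
    if terminal:
        # No bootstrapping past the end of an episode.
        return n_step_return
    # Double Q-learning: the online network selects the action,
    # the target network evaluates it.
    best_action = max(range(len(online_q_next)), key=lambda a: online_q_next[a])
    return n_step_return + (gamma ** n) * target_q_next[best_action]


# Toy values standing in for network outputs (hypothetical numbers).
q_values = dueling_q_values(state_value=1.0, advantages=[0.5, -0.5, 0.0])
target = n_step_double_q_target(
    rewards=[1.0, 0.0, 1.0],
    online_q_next=[0.2, 0.9, 0.1],
    target_q_next=[0.5, 0.4, 0.8],
    gamma=0.5,
    terminal=False,
)
```

Note that the bootstrap uses the target network's value for the action the online network prefers (index 1 here), not the target network's own maximum.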

After Ape-X, R2D2 [Kapturowski et al. 2019] proposed using a recurrent neural network (RNN) in place of the CNN that Ape-X had used for its DQN architecture. They investigated two methods for initializing the RNN hidden state during training: “burn-in”, which warms up the hidden state by first running a few steps of forward propagation; and “stored state”, which reuses the hidden state generated during data sampling. MarltasCore implements the stored state method.
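The difference between the two initialization strategies can be sketched with a toy scalar recurrent cell. The cell, its weights, and the replayed sequence below are hypothetical stand-ins for a real RNN and replay buffer, not MarltasCore code.

```python
import math
from typing import List, Sequence


def rnn_step(hidden: float, observation: float, w_h: float = 0.5, w_x: float = 1.0) -> float:
    """One step of a toy scalar recurrent cell (a stand-in for an LSTM)."""
    return math.tanh(w_h * hidden + w_x * observation)


def unroll(hidden: float, observations: Sequence[float]) -> List[float]:
    """Run the cell over a sequence and return the hidden state after each step."""
    states = []
    for obs in observations:
        hidden = rnn_step(hidden, obs)
        states.append(hidden)
    return states


# A hypothetical replayed sequence. The Actor recorded the hidden state
# it held just before the first step of the sequence.
observations = [0.3, -0.1, 0.8, 0.5, -0.4, 0.2]
actor_hidden_at_start = 0.7

# "Stored state": training starts from the recorded hidden state,
# so every step of the sequence can contribute to the loss.
stored_state_outputs = unroll(actor_hidden_at_start, observations)

# "Burn-in": training starts from zeros; the first steps only warm up
# the hidden state, and the loss is computed on the remaining steps.
burn_in_steps = 2
warm_hidden = unroll(0.0, observations[:burn_in_steps])[-1]
burn_in_outputs = unroll(warm_hidden, observations[burn_in_steps:])
```

With stored state, all six steps are available for training, at the cost of storing a hidden state with each sequence. With burn-in, the first two steps are spent on warming up the state.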

Never Give Up (NGU, [Badia et al. 2020a]) proposed an intrinsic reward that measures the novelty of observations. They introduced two kinds of modules for computing the intrinsic reward: the “life-long novelty module” and the “episodic novelty module.” The former utilizes Random Network Distillation (RND, [Burda et al. 2018]), which feeds each observation to a randomly initialized CNN and another CNN called the predictor network. The predictor network is trained to predict the output features of the randomly initialized CNN, and the distance between the predicted and actual features is used as the intrinsic reward. By distilling the randomly initialized network, the predictor network is able to store information about all past observations since the beginning of training, resulting in a life-long novelty reward. The episodic novelty module also stores information about past observations and compares them to the current observation, but its memory is cleared after each episode. In the NGU paper, the authors also investigated the use of Retrace [Munos et al. 2016], an importance sampling method that helps alleviate the discrepancy between the agent’s policy at sampling time and at replay time. MarltasCore supports both of the above intrinsic reward modules, but not Retrace.
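A minimal sketch of the two novelty signals follows, under strong simplifying assumptions: bias-free linear maps stand in for the CNNs, and the episodic module uses a plain nearest-neighbour distance on raw observations rather than the formulation in the NGU paper. All class and variable names are hypothetical.

```python
import random
from typing import List

Vector = List[float]


def linear(weights: List[Vector], x: Vector) -> Vector:
    """Apply a bias-free linear layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def random_weights(rng: random.Random, n_out: int, n_in: int) -> List[Vector]:
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


class RandomNetworkDistillation:
    """Life-long novelty: prediction error against a fixed random target network."""

    def __init__(self, obs_dim: int, feature_dim: int, lr: float = 0.05, seed: int = 0):
        rng = random.Random(seed)
        self.target = random_weights(rng, feature_dim, obs_dim)     # never trained
        self.predictor = random_weights(rng, feature_dim, obs_dim)  # trained to imitate the target
        self.lr = lr

    def _errors(self, obs: Vector) -> Vector:
        return [p - t for p, t in zip(linear(self.predictor, obs), linear(self.target, obs))]

    def intrinsic_reward(self, obs: Vector) -> float:
        return sum(e * e for e in self._errors(obs))

    def update(self, obs: Vector) -> None:
        """One SGD step on the squared prediction error for this observation."""
        for row, err in zip(self.predictor, self._errors(obs)):
            for i, xi in enumerate(obs):
                row[i] -= self.lr * 2.0 * err * xi


class EpisodicNovelty:
    """Episodic novelty: distance to the nearest observation seen in this episode."""

    def __init__(self) -> None:
        self.memory: List[Vector] = []

    def intrinsic_reward(self, obs: Vector) -> float:
        if self.memory:
            reward = min(sum((a - b) ** 2 for a, b in zip(obs, m)) for m in self.memory) ** 0.5
        else:
            reward = 1.0  # assumption: an empty memory counts as fully novel
        self.memory.append(list(obs))
        return reward

    def reset(self) -> None:
        self.memory.clear()


rnd = RandomNetworkDistillation(obs_dim=4, feature_dim=8)
seen, novel = [1.0, 0.0, 0.5, 0.2], [0.0, 1.0, 0.0, 0.0]
reward_before = rnd.intrinsic_reward(seen)
for _ in range(50):
    rnd.update(seen)
reward_after = rnd.intrinsic_reward(seen)   # shrinks as the observation becomes familiar
reward_novel = rnd.intrinsic_reward(novel)  # an observation the predictor was never trained on

episodic = EpisodicNovelty()
first_visit = episodic.intrinsic_reward(seen)
revisit = episodic.intrinsic_reward(seen)   # 0.0: the same observation is already in memory
episodic.reset()                            # memory is cleared at the end of the episode
```

The predictor's weights persist across episodes, which is what makes the RND signal life-long, whereas `reset()` discards everything the episodic module has seen.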

Agent57 [Badia et al. 2020b] focuses on the exploration and exploitation dilemma. The NGU agent combined extrinsic (from the environment) and intrinsic rewards using a fixed ratio that controls the amount of exploration. Agent57 proposed utilizing sliding-window UCB [Moulines et al. 1985] to select the optimal ratio from a set of candidates. MarltasCore implements the UCB1-tuned algorithm [Kuleshov et al. 2014], but with the training method of sliding-window UCB applied.
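To make the idea of a bandit over exploration ratios concrete, here is a minimal sliding-window UCB sketch. It is a plain UCB rule computed over a window of recent plays, not the UCB1-tuned variant that MarltasCore implements. The candidate ratios, window size, and toy reward function are hypothetical.

```python
import math
from collections import deque
from typing import Deque, List, Tuple


class SlidingWindowUCB:
    """Bandit that only remembers the most recent `window` (arm, reward) pairs."""

    def __init__(self, n_arms: int, window: int, exploration: float = 1.0):
        self.n_arms = n_arms
        self.exploration = exploration
        self.history: Deque[Tuple[int, float]] = deque(maxlen=window)

    def select_arm(self) -> int:
        counts = [0] * self.n_arms
        sums = [0.0] * self.n_arms
        for arm, reward in self.history:
            counts[arm] += 1
            sums[arm] += reward
        # Any arm with no sample inside the window is tried first.
        for arm, count in enumerate(counts):
            if count == 0:
                return arm
        total = len(self.history)
        scores = [
            sums[a] / counts[a] + self.exploration * math.sqrt(math.log(total) / counts[a])
            for a in range(self.n_arms)
        ]
        return max(range(self.n_arms), key=lambda a: scores[a])

    def update(self, arm: int, reward: float) -> None:
        self.history.append((arm, reward))  # the deque drops the oldest entry when full


candidate_betas = [0.0, 0.1, 0.3]  # hypothetical intrinsic-reward ratios, one per arm
bandit = SlidingWindowUCB(n_arms=len(candidate_betas), window=50)


def toy_episode_return(arm: int, episode: int) -> float:
    """Hypothetical returns: the best ratio changes halfway through training."""
    best_arm = 2 if episode < 200 else 0
    return 1.0 if arm == best_arm else 0.0


chosen: List[int] = []
for episode in range(400):
    arm = bandit.select_arm()
    bandit.update(arm, toy_episode_return(arm, episode))
    chosen.append(arm)
```

Because statistics older than the window are forgotten, the bandit can move away from a ratio that used to work well once it stops paying off. This matters during training, where the most useful amount of exploration tends to change over time.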

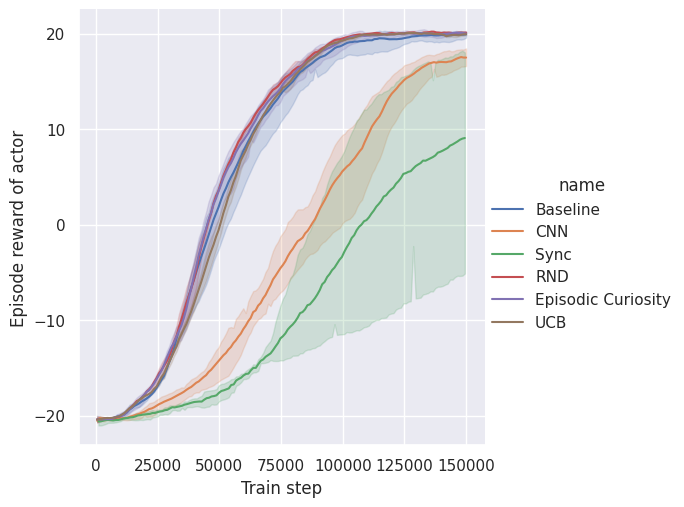

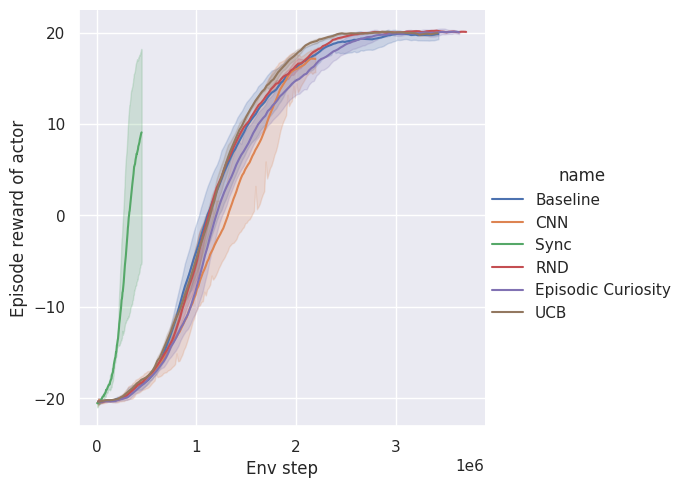

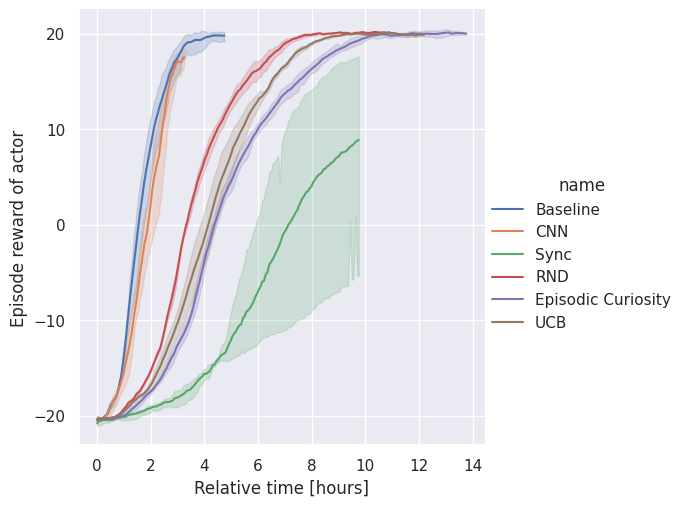

We verified the performance of MarltasCore by training several DQN variants with different settings. Each agent was trained three times with different random seeds. The task is Pong, one of the Atari benchmark games. The following is the list of examined agents:

The figures show the moving average of the total non-discounted episode reward. Each subplot shows the same data plotted against a different X-axis: “learning step” (number of DQN updates in the Learner, (a)), “env step” (total number of environment steps in the Actors, (b)), and “relative time” since the start of training (c). Note that all the experiments are conducted using the same computational budget.

The baseline and its variants with additional intrinsic reward modules achieved total episode rewards of close to 21, which is the maximum for the game, whereas the Sync and CNN agents could not achieve that reward even after the maximum number of training steps. We were unable to observe any benefits of the intrinsic reward modules in the Pong experiments. We think Pong is too easy to reveal any differences between exploration strategies.

When the episode reward data is plotted in terms of env step, the Sync agent appears to perform the best. However, its growth is much slower than the others in real time. The CNN and Baseline agents are faster than the RND, Episodic Curiosity, and UCB agents. This is because we adopt a split \(Q\)-network architecture, which requires twice as many forward and backward passes to compute the extrinsic and intrinsic action values. Overall, asynchronous learning brings faster convergence in real time, but consumes more environment steps. Synchronous learning, on the other hand, shows better sample complexity at the cost of computation time.
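The cost of the split architecture can be seen in a small sketch. It assumes the simplest linear combination rule, \(Q = Q_{ext} + \beta Q_{int}\), and the function name and toy values are hypothetical. Each list stands for the output of a separate network, so producing the combined values takes two forward passes instead of one.

```python
from typing import List, Sequence


def combine_split_q(
    q_extrinsic: Sequence[float], q_intrinsic: Sequence[float], beta: float
) -> List[float]:
    """Combine per-action values from the two networks: Q = Q_ext + beta * Q_int."""
    return [q_e + beta * q_i for q_e, q_i in zip(q_extrinsic, q_intrinsic)]


q_ext = [1.0, 0.5, 0.0]  # output of the extrinsic network (first forward pass)
q_int = [0.0, 0.5, 2.0]  # output of the intrinsic network (second forward pass)

exploit_values = combine_split_q(q_ext, q_int, beta=0.0)
explore_values = combine_split_q(q_ext, q_int, beta=1.0)
greedy_exploit = max(range(len(exploit_values)), key=lambda a: exploit_values[a])
greedy_explore = max(range(len(explore_values)), key=lambda a: explore_values[a])
```

With `beta=0.0` the greedy action follows the extrinsic values only, while a larger `beta` shifts the choice toward actions with high intrinsic value.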

In this article, we introduced a DQN implementation named MarltasCore. MarltasCore supports distributed asynchronous learning, a key technique for efficient data sampling. This learning method is also crucial for sampling data from computationally expensive environments. These distributed and asynchronous computations are made possible by a decentralized architecture design and HTTP communication via gRPC. Several recent DQN techniques are implemented as part of MarltasCore, including intrinsic rewards.

As we reported in the Pong experiments, the performance of DQN agents can be affected not only by choice of algorithm or hyperparameters, but also by the training framework’s implementation. We hope this project will help reinforcement learning researchers and developers who conduct DQN experiments.

[DQN] Mnih et al. 2015. Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A. Rusu, Joel Veness, Marc G. Bellemare, Alex Graves, et al. 'Human-Level Control through Deep Reinforcement Learning.' Nature 518, no. 7540 (February 26, 2015): 529–33.

[Ape-X] Horgan et al. 2018. Dan Horgan, John Quan, David Budden, Gabriel Barth-Maron, Matteo Hessel, Hado van Hasselt, and David Silver. 'Distributed Prioritized Experience Replay.' ICLR2018, 1–19. https://arxiv.org/abs/1803.00933

[R2D2] Kapturowski et al. 2019. Steven Kapturowski, Georg Ostrovski, John Quan, and Will Dabney. 'Recurrent Experience Replay in Distributed Reinforcement Learning.' ICLR2019, 1–19. https://openreview.net/forum?id=r1lyTjAqYX

[NGU] Badia et al. 2020a. Adrià Puigdomènech Badia, Pablo Sprechmann, Alex Vitvitskyi, Daniel Guo, Bilal Piot, Steven Kapturowski, Olivier Tieleman, Martin Arjovsky, Alexander Pritzel, Andrew Bolt, Charles Blundell. 'Never Give Up: Learning Directed Exploration Strategies,' ICLR2020. http://arxiv.org/abs/2002.06038

[Agent57] Badia et al. 2020b. Adrià Puigdomènech Badia, Bilal Piot, Steven Kapturowski, Pablo Sprechmann, Alex Vitvitskyi, Daniel Guo, and Charles Blundell. 'Agent57: Outperforming the Atari Human Benchmark,' 2020. http://arxiv.org/abs/2003.13350

[DoubleQ] Lafferty et al. 2010. Hado van Hasselt. 'Double Q-Learning.' Advances in Neural Information Processing Systems 23: 2613–2621, 2010.

[Dueling] Wang et al. 2016. Ziyu Wang, Tom Schaul, Matteo Hessel, Hado van Hasselt, Marc Lanctot, Nando de Freitas. 'Dueling Network Architectures for Deep Reinforcement Learning.' International Conference on Machine Learning, PMLR 48:1995-2003, 2016. https://arxiv.org/abs/1511.06581

[n-step] Mnih et al. 2016. Volodymyr Mnih, Adrià Puigdomènech Badia, Mehdi Mirza, Alex Graves, Tim Harley, Timothy P. Lillicrap, David Silver, and Koray Kavukcuoglu. 'Asynchronous Methods for Deep Reinforcement Learning,' In Proceedings of the 33rd International Conference on Machine Learning - Volume 48 (ICML'16). JMLR.org, 1928–1937, 2016. https://arxiv.org/abs/1602.01783

[RND] Burda et al. 2018. Yuri Burda, Harrison Edwards, Amos Storkey, Oleg Klimov. 'Exploration by Random Network Distillation.' 7th International Conference on Learning Representations, ICLR2019, 1–17. https://arxiv.org/abs/1810.12894

[SlidingWindowUCB] Moulines et al. 1985. Aurélien Garivier, Eric Moulines. 'On Upper-Confidence Bound Policies for Non-Stationary Bandit Problems,' Lecture Notes in Computer Science, vol 6925. Springer, Berlin, Heidelberg. 2011. https://arxiv.org/abs/0805.3415

[UCB1-Tuned] Kuleshov et al. 2014. Kuleshov, Volodymyr, and Doina Precup. 'Algorithms for Multi-Armed Bandit Problems,' February 24, 2014. http://arxiv.org/abs/1402.6028

[Retrace] Munos et al. 2016. Remi Munos, Tom Stepleton, Anna Harutyunyan, and Marc Bellemare. 'Safe and Efficient Off-Policy Reinforcement Learning.' Advances in Neural Information Processing Systems 29 (2016): 1054–62. http://arxiv.org/abs/1606.02647