This article is automatically translated.

We introduce Marltas, a system that learns to play games posted on RPG Atsumaru, niconico's game submission service. The demo below shows Marltas's progress as it learns the game “きまぐれバウンドボール”. The horizontal axis of the graph is the number of learning steps, and the vertical axis is the average reward; clicking the graph plays a gameplay video from the closest point in training. At first Marltas barely returns the ball, but as learning progresses it drops the ball less and less often.

Video game AI is a field of artificial intelligence research covering topics such as designing the behavior of computer-controlled (COM) players and automating debugging in game development. Recently, with growing attention on reinforcement learning, researchers have tackled not only classic arcade games such as those for the Atari 2600 [1] but also complex games such as StarCraft II, and there are reports of AI competing with, and even beating, top human players [2].

Marltas is one such video game AI. It uses a method called reinforcement learning to learn how to play games posted on RPG Atsumaru. At the start of training it knows nothing about how to operate a game and quickly reaches game over, but as it gains experience it gradually improves and achieves higher scores. Our aim is to use Marltas to make RPG Atsumaru games more enjoyable: for example, by creating automatic-play videos that show off what makes a posted game fun, or by analyzing the results of automatic play and feeding them back to the game's creators.

On Wednesday, August 28, 2019, we will hold the first live broadcast of a Marltas learning experiment: a 24-hour stream showing Marltas actually learning and improving.

There are many approaches to “artificial intelligence.” Traditional game AI is often rule-based, built from conditional branches. Because video games are made by people, the rules for what happens in response to each input are written into the game itself, so if you understand the game's logic you can probably write rules for “good” play. In practice, however, writing such rules becomes difficult when the game logic is complex or involves randomness. An alternative is machine learning: instead of having a human designer write the rules, the system acquires them automatically from experience data collected by actually playing the game.
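To make the contrast concrete, here is a minimal sketch of a hand-written, rule-based controller for a paddle-and-ball game. The state fields (`ball_x`, `paddle_x`), the action names, and the `dead_zone` parameter are hypothetical; a real game exposes no such variables to Marltas, which sees only the screen image. The point is simply that every branch is authored by a designer.

```python
# Hypothetical rule-based policy for a paddle-and-ball game.
# The state fields and action names are assumptions for illustration only.
from dataclasses import dataclass


@dataclass
class PaddleState:
    ball_x: float    # horizontal position of the ball
    paddle_x: float  # horizontal position of the paddle centre


def rule_based_action(state: PaddleState, dead_zone: float = 2.0) -> str:
    """Move the paddle toward the ball using hand-written if/else rules."""
    if state.ball_x < state.paddle_x - dead_zone:
        return "left"
    if state.ball_x > state.paddle_x + dead_zone:
        return "right"
    return "stay"  # ball is roughly above the paddle


print(rule_based_action(PaddleState(ball_x=10.0, paddle_x=30.0)))  # left
```

A rule like this only works while the designer can anticipate how the game behaves; once the ball's motion depends on complex logic or randomness, writing and maintaining such branches by hand becomes impractical.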

Unlike many real-world problems, video games allow repeated trials under identical conditions. For this reason, video game AI often uses a family of machine learning methods called online learning, in which the system keeps improving by learning from data it collects while it is being used. In other words, the system plays the game over and over and uses the experience it gains to update itself. Videos of attempts to clear games using genetic algorithms and decision trees have also been posted on niconico.

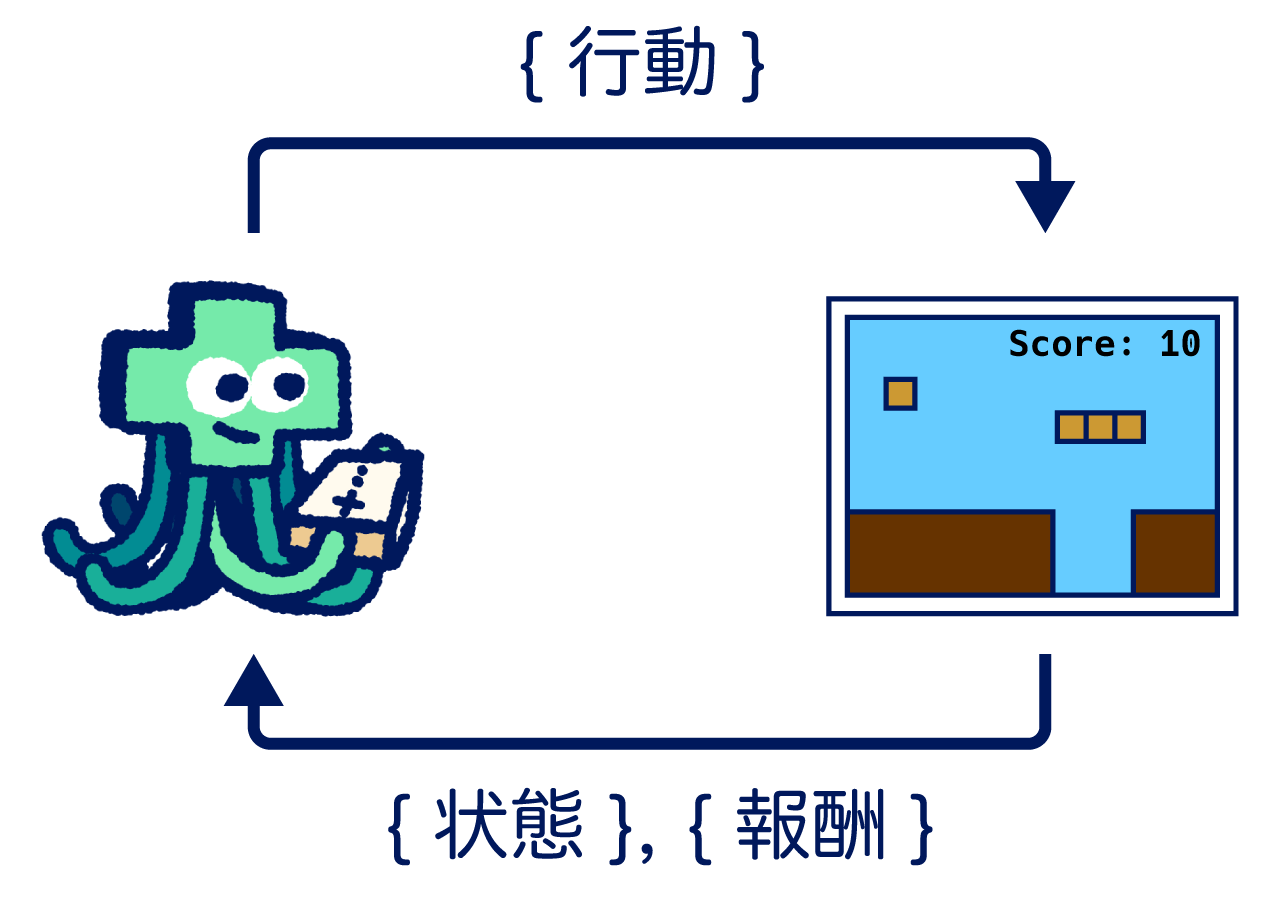

Marltas adopts a type of online learning called reinforcement learning. Reinforcement learning optimizes how to act on a target environment (here, a game). The environment has a state, and each action changes that state. The entity that chooses actions based on the state is called the agent, and its rule for choosing actions is called the policy. With each state transition, the agent receives the new state together with a reward, a number indicating how good that transition was; how the reward is defined is up to the designer. Starting from an initial state, the agent selects an action, receives the new state and reward from the environment, selects another action, and so on. This produces a sequence of actions, states, and rewards, and the goal of reinforcement learning is to find a policy that maximizes the sum of rewards over that sequence.
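The interaction loop described above can be sketched in a few lines. `ToyEnv`, its `reset`/`step` methods, and `random_policy` are hypothetical stand-ins loosely modelled on the common Gym-style interface, not Marltas's code. One call to `run_episode` produces the state/action/reward sequence, and its total reward is the quantity reinforcement learning tries to maximize.

```python
import random
from typing import Callable, List, Tuple


class ToyEnv:
    """Hypothetical environment: the state is a step counter and an episode lasts 5 steps.

    Action 1 earns a reward of +1; action 0 earns nothing.
    """

    def __init__(self, horizon: int = 5):
        self.horizon = horizon
        self.t = 0

    def reset(self) -> int:
        self.t = 0
        return self.t  # initial state

    def step(self, action: int) -> Tuple[int, float, bool]:
        reward = 1.0 if action == 1 else 0.0
        self.t += 1
        done = self.t >= self.horizon
        return self.t, reward, done  # new state, reward, episode-finished flag


def random_policy(state: int) -> int:
    """A policy maps a state to an action; this one ignores the state."""
    return random.choice([0, 1])


def run_episode(env: ToyEnv, policy: Callable[[int], int]) -> Tuple[List[tuple], float]:
    """Play one episode and return the (state, action, reward) sequence and its total reward."""
    trajectory = []
    state = env.reset()
    done = False
    total_reward = 0.0
    while not done:
        action = policy(state)
        next_state, reward, done = env.step(action)
        trajectory.append((state, action, reward))
        total_reward += reward
        state = next_state
    return trajectory, total_reward


traj, ret = run_episode(ToyEnv(), random_policy)
print(len(traj), ret)  # 5 steps; ret is somewhere between 0.0 and 5.0
```

Learning then amounts to replacing `random_policy` with a policy that is updated from trajectories like `traj` so that the total reward grows.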

Let's map this setup onto Marltas. The environment is the game itself. The state is data describing the current play situation, and Marltas uses the game screen image for it. Actions are button presses, and the reward, which measures how good a piece of play (a state transition) was, is the score. Marltas therefore receives game screen images, plays the game from start to finish, and learns to maximize the total score. Concretely, the reward at each step is the change in score since the previous step, so that the sum of rewards over an episode equals the final score.
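The reward design described above (reward = score difference per step) can be checked directly: the differences telescope, so their sum is the final score minus the starting score, which is the final score itself when a game starts at 0. The score readings below are made-up example data, and `score_to_rewards` is a hypothetical helper name.

```python
from typing import List


def score_to_rewards(scores: List[int]) -> List[int]:
    """Convert per-step score readings into per-step rewards (score differences)."""
    return [curr - prev for prev, curr in zip(scores, scores[1:])]


# Hypothetical score readings, one per step, starting from 0.
scores = [0, 0, 10, 10, 30, 25, 40]
rewards = score_to_rewards(scores)
print(rewards)       # [0, 10, 0, 20, -5, 15]
print(sum(rewards))  # 40, the final score
```

Note that a drop in score (30 to 25) becomes a negative reward, so the agent is also told when an action made things worse.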

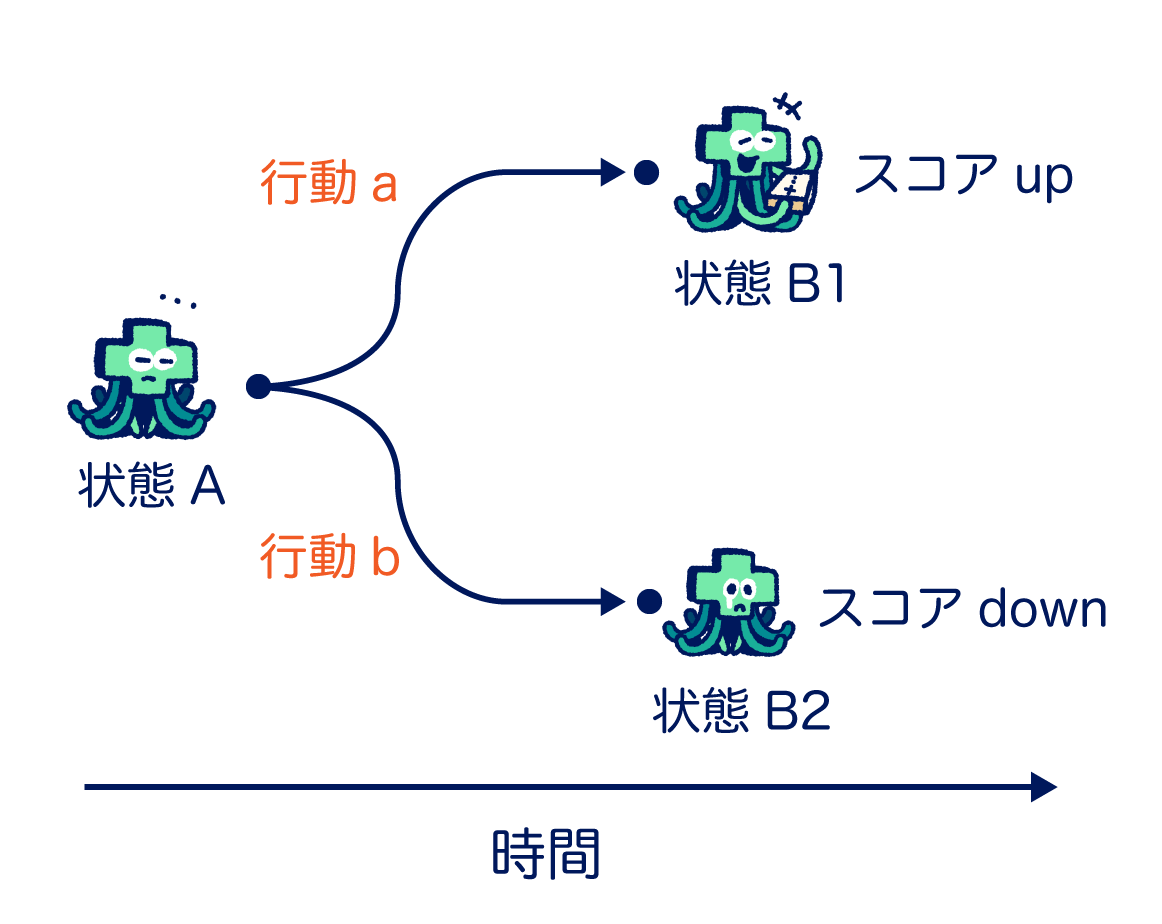

The diagram above shows the simple case where only one action is chosen. Marltas sees a game screen (State A) and decides whether to press button a or button b. Depending on the button pressed, the game moves to State B1 or B2 and the score rises or falls. In this example, pressing button a in State A is the better policy because it increases the score.

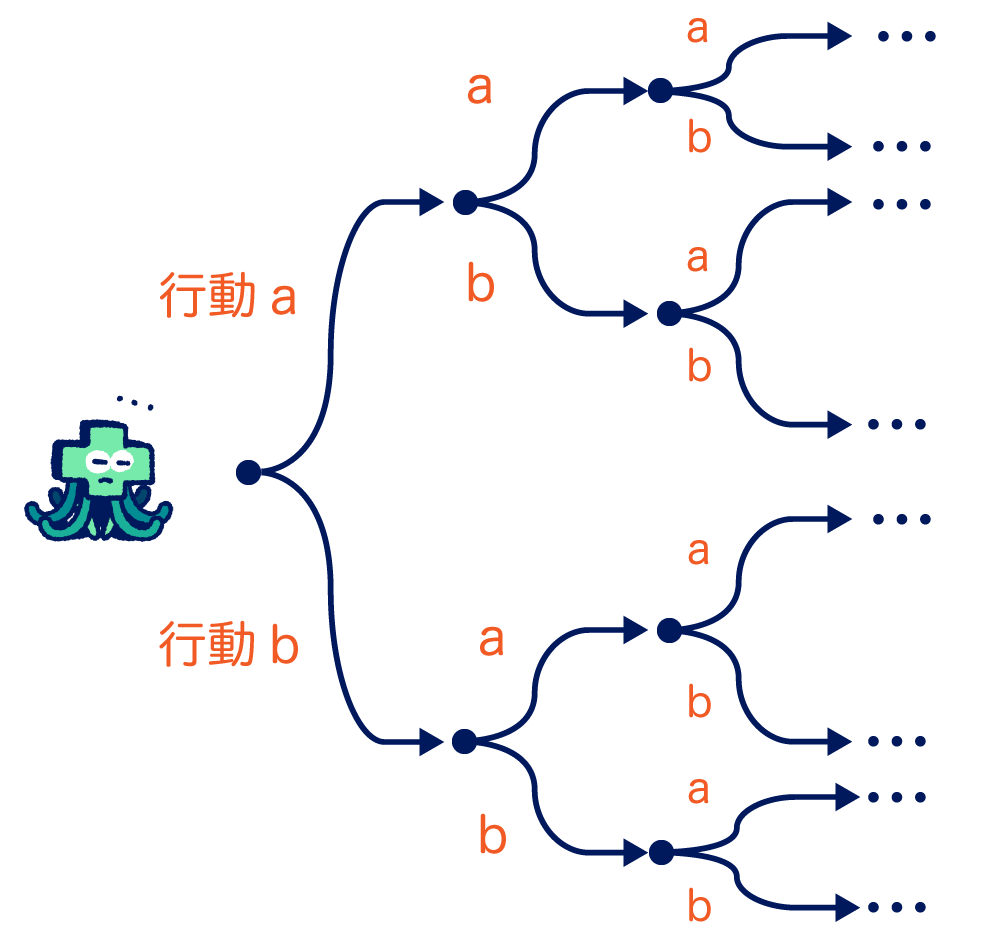

Now consider pressing buttons several times. In the diagram above, Marltas starts in State A, but because it will press buttons repeatedly, the future branches out. Even if a single press raises the score immediately, it may lead down an unlucky path with little score afterward. Therefore Marltas considers the total future score obtained by choosing action a or b in State A. This total is called the action value or Q-value, and the function that computes it is called the Q-function. If the correct Q-function is known, Marltas can compute the value of each action from the game state and keep taking the optimal action at every step.
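A small worked example of this point: in the hypothetical game tree below (the states, rewards, and tree shape are made up), button `a` gives the larger immediate score in State A, but button `b` leads to the larger total. `q_value` computes the Q-value as the immediate reward plus the best total obtainable afterwards, assuming deterministic transitions, optimal play from then on, and no discounting.

```python
from typing import Dict, Tuple

# Hypothetical deterministic game tree:
# TREE[state][action] = (reward, next_state); states without an entry are terminal.
TREE: Dict[str, Dict[str, Tuple[float, str]]] = {
    "A":  {"a": (10.0, "B1"), "b": (2.0, "B2")},
    "B1": {"a": (0.0, "END"), "b": (1.0, "END")},
    "B2": {"a": (20.0, "END"), "b": (0.0, "END")},
}


def state_value(state: str) -> float:
    """Best total future reward reachable from `state`."""
    if state not in TREE:  # terminal state
        return 0.0
    return max(q_value(state, action) for action in TREE[state])


def q_value(state: str, action: str) -> float:
    """Q(s, a): immediate reward plus the best total reward afterwards."""
    reward, next_state = TREE[state][action]
    return reward + state_value(next_state)


for action in ("a", "b"):
    print(action, q_value("A", action))
# a 11.0
# b 22.0
```

Enumerating the whole tree like this only works for tiny games, which is why Deep Q-Learning approximates the Q-function instead.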

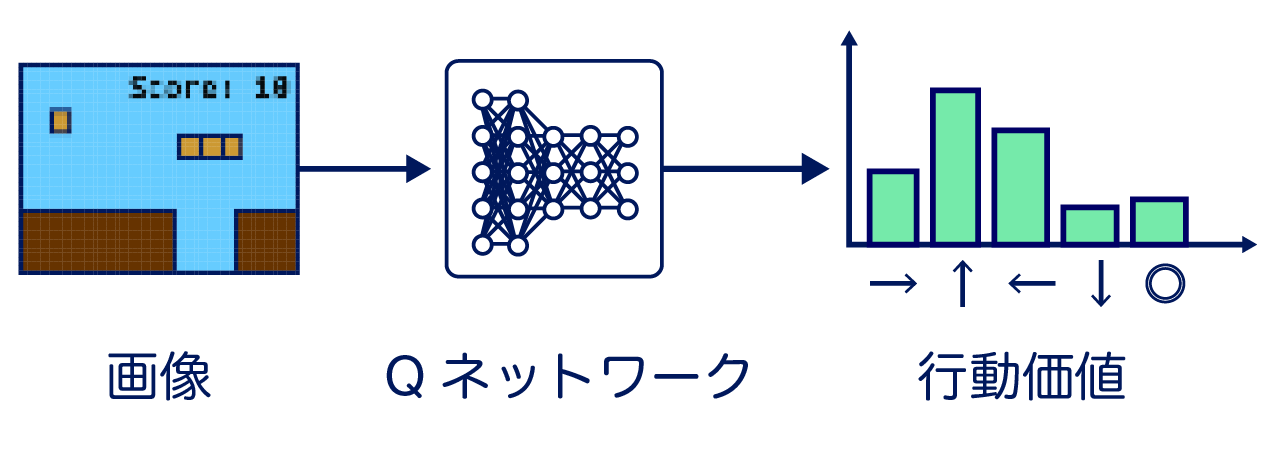

Marltas learns the Q-function with a method called Deep Q-Learning [3]. The Q-function is unknown at first. In principle, the optimal Q-function could be built by enumerating every combination of observation and action, but in practice the number of combinations is enormous, and when observations are continuous values it is effectively impossible. Deep Q-Learning therefore approximates the Q-function with a neural network, called a “Q-network.” The Q-network takes a state as input and outputs the value of each action, and it is trained on experience data from actual gameplay so that these value estimates become more accurate.
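As a rough picture of what a Q-network computes, the sketch below is a tiny two-layer network in plain Python that maps a state vector to one Q-value per action and picks the action with the highest value. The class name `TinyQNetwork`, the layer sizes, the random initialisation, and the 4-dimensional state are arbitrary assumptions; a Q-network that reads screen images, as in [3], would use convolutional layers and a deep-learning framework.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Matrix:
    """Weights for one fully connected layer (bias stored as the last entry of each row)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]


def dense(weights: Matrix, x: Vector) -> Vector:
    """y = W x + b for one layer."""
    return [sum(w * xi for w, xi in zip(row[:-1], x)) + row[-1] for row in weights]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


class TinyQNetwork:
    """State vector -> hidden layer (ReLU) -> one Q-value per action."""

    def __init__(self, state_dim: int, n_actions: int, hidden: int = 8, seed: int = 0):
        rng = random.Random(seed)
        self.w1 = init_layer(state_dim, hidden, rng)
        self.w2 = init_layer(hidden, n_actions, rng)

    def q_values(self, state: Vector) -> Vector:
        return dense(self.w2, relu(dense(self.w1, state)))

    def greedy_action(self, state: Vector) -> int:
        """Index of the action with the highest estimated Q-value."""
        q = self.q_values(state)
        return max(range(len(q)), key=q.__getitem__)


net = TinyQNetwork(state_dim=4, n_actions=2)
state = [0.1, -0.2, 0.0, 0.5]  # stand-in for a (heavily preprocessed) game screen
print(net.q_values(state), net.greedy_action(state))
```

Outputting all action values in one forward pass, rather than feeding each action in separately, means a single evaluation is enough to pick the best button.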

The learning of the Q-network roughly follows these steps:

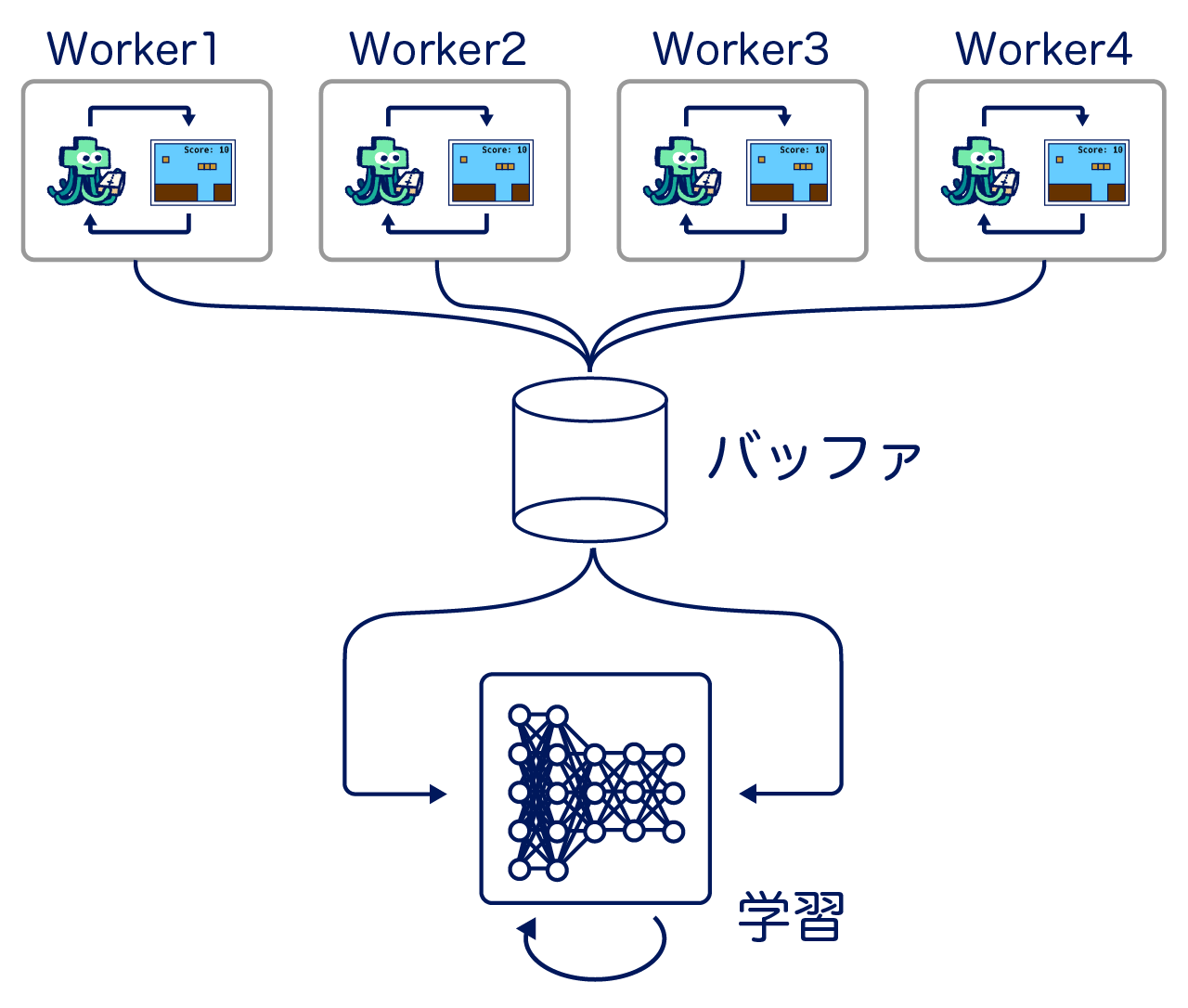
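A single-process version of this learning procedure (play to collect experience, store it in a buffer, sample a batch, update the Q-function toward its target) can be sketched as follows. This is a deliberately small toy under stated assumptions: a hypothetical five-state "walk right to reach the goal" game replaces a real game, and a linear Q-function over one-hot features stands in for the neural network. The squared-error update toward r + γ·max Q(s′, a′) is the same idea Deep Q-Learning applies to a deep network, but this sketch omits the separate target network used in [3], applies updates one sample at a time, and uses arbitrary hyperparameters rather than Marltas's settings.

```python
import random
from collections import deque
from typing import List, Tuple

N_STATES, N_ACTIONS = 5, 2   # hypothetical chain 0..4; action 0 = left, 1 = right
GOAL = N_STATES - 1          # reaching state 4 gives reward 1 and ends the episode
GAMMA, LR, EPSILON = 0.9, 0.1, 0.3
BATCH_SIZE, MAX_STEPS = 8, 50


def features(state: int) -> List[float]:
    """One-hot encoding; a real Q-network would take screen pixels instead."""
    return [1.0 if i == state else 0.0 for i in range(N_STATES)]


def q_values(weights: List[List[float]], state: int) -> List[float]:
    """Linear Q-function: one weight vector per action."""
    phi = features(state)
    return [sum(w * f for w, f in zip(weights[a], phi)) for a in range(N_ACTIONS)]


def env_step(state: int, action: int) -> Tuple[int, float, bool]:
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def train(episodes: int = 300, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    weights = [[0.0] * N_STATES for _ in range(N_ACTIONS)]
    buffer: deque = deque(maxlen=10_000)  # experience replay buffer
    for _ in range(episodes):
        state = 0
        for _ in range(MAX_STEPS):
            # 1. Choose an action epsilon-greedily (ties broken at random).
            q = q_values(weights, state)
            if rng.random() < EPSILON:
                action = rng.randrange(N_ACTIONS)
            else:
                best = max(q)
                action = rng.choice([a for a in range(N_ACTIONS) if q[a] == best])
            # 2. Play one step and store the transition.
            next_state, reward, done = env_step(state, action)
            buffer.append((state, action, reward, next_state, done))
            # 3. Sample a mini-batch and move Q(s, a) toward r + GAMMA * max_a' Q(s', a').
            if len(buffer) >= BATCH_SIZE:
                for s, a, r, s2, d in rng.sample(list(buffer), BATCH_SIZE):
                    target = r if d else r + GAMMA * max(q_values(weights, s2))
                    error = target - q_values(weights, s)[a]
                    phi = features(s)
                    # Gradient step on 0.5 * error**2 with respect to weights[a].
                    weights[a] = [w + LR * error * f for w, f in zip(weights[a], phi)]
            state = next_state
            if done:
                break
    return weights


w = train()
# Learned greedy action for each non-goal state (1 = move right).
print([max(range(N_ACTIONS), key=lambda a: q_values(w, s)[a]) for s in range(GOAL)])
```

Sampling from the buffer rather than learning only from the latest step lets each piece of experience be reused many times and breaks up the strong correlation between consecutive frames.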

Optimizing the Q-network requires a large amount of data pairing observations and actions with the rewards they produced, which means a lot of actual gameplay. In reinforcement learning, generating this training data generally takes longer than optimizing the Q-network parameters, so we run multiple instances of the same game in parallel to collect data efficiently. Specifically, as shown in the diagram above, multiple processes (called workers or actors), each with its own Q-network and game, run in parallel and send their experience to a single shared buffer. The Q-network is trained in one process, which repeatedly samples a fixed number of transitions from the buffer as a batch and optimizes on it. Marltas currently follows a learning method called Ape-X [4] and is implemented mainly with a library called RLlib [5]. Refer to this article for more details about this library.
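The actor/learner split can be illustrated with threads in a single Python process. This is a simplified sketch, not Ape-X or RLlib: each hypothetical actor generates fake transitions and appends them in chunks to one shared, lock-protected buffer, and a learner then samples a fixed-size batch from it. Ape-X additionally runs actors as separate processes or machines and samples with prioritized experience replay [4]; both are omitted here, and the class and function names are illustrative.

```python
import random
import threading
from collections import deque
from typing import List, Tuple

Transition = Tuple[int, int, float, int]  # (state, action, reward, next_state)


class SharedReplayBuffer:
    """A bounded buffer that many actors write to and one learner reads from."""

    def __init__(self, capacity: int):
        self._data: deque = deque(maxlen=capacity)
        self._lock = threading.Lock()

    def add_many(self, transitions: List[Transition]) -> None:
        with self._lock:
            self._data.extend(transitions)

    def sample(self, batch_size: int, rng: random.Random) -> List[Transition]:
        with self._lock:
            return rng.sample(list(self._data), batch_size)

    def __len__(self) -> int:
        with self._lock:
            return len(self._data)


def actor(actor_id: int, buffer: SharedReplayBuffer, n_steps: int) -> None:
    """Hypothetical actor: plays its own copy of a game and ships experience in chunks."""
    rng = random.Random(actor_id)
    local: List[Transition] = []
    for t in range(n_steps):
        action = rng.randrange(2)
        local.append((t, action, float(action), t + 1))  # fake transition
        if len(local) == 10:  # send in chunks to reduce lock contention
            buffer.add_many(local)
            local = []
    if local:
        buffer.add_many(local)


buffer = SharedReplayBuffer(capacity=100_000)
threads = [threading.Thread(target=actor, args=(i, buffer, 500)) for i in range(4)]
for th in threads:
    th.start()
for th in threads:
    th.join()

# Learner side: draw one batch for one optimisation step of the Q-network.
batch = buffer.sample(32, random.Random(0))
print(len(buffer), len(batch))  # 2000 32
```

In a real system the learner samples and trains concurrently while the actors keep playing, and the actors periodically copy the learner's latest Q-network weights. Threads are used here only to keep the sketch short; running actual games would call for separate processes.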

We used Deep Q-Learning to train Marltas on eight different games from RPG Atsumaru. Because RPG Atsumaru games run in the browser, we launched multiple browsers, captured the game screens, and used mouse clicks and keyboard input as actions. You can watch gameplay videos from the learning process in this article. Please play the same games yourself to see how well Marltas performs.

One finding from the experiment is that Marltas cannot master every game; there are games it is good at and games it is bad at. For example, it tends to excel in action games that demand relatively quick and precise input, but it struggles with games that require long-term planning. Planning is known to be a hard problem for reinforcement learning in general, mainly because when the reward for an action arrives only after a long delay, it is difficult to estimate that action's value accurately.
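One way to see why delayed rewards are hard: Q-learning methods usually optimize a discounted return, in which a reward arriving k steps after an action contributes only γ^k to that action's value. The discount factor γ = 0.99 below is an assumption (the value Marltas uses is not stated here), and `discounted_return` is an illustrative helper. The sketch prints how much of a single reward of 1 survives at different delays.

```python
from typing import List


def discounted_return(rewards: List[float], gamma: float) -> float:
    """G = r_0 + gamma * r_1 + gamma**2 * r_2 + ..., computed back to front."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


gamma = 0.99
for delay in (1, 10, 100, 1000):
    # A single reward of 1.0 that arrives `delay` steps after the action.
    rewards = [0.0] * delay + [1.0]
    print(f"delay={delay:4d}  value={discounted_return(rewards, gamma):.3g}")
```

At γ = 0.99, a reward 1000 steps away is worth less than 0.0001 of an immediate one, so its signal is easily swamped by nearer, smaller rewards; raising γ keeps distant rewards visible but makes value estimates noisier and slower to learn.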

This article introduced Marltas, a system that automatically learns to play video games. We explained where Marltas sits within game AI and gave an overview of Deep Q-Learning, the learning algorithm it uses.

Marltas has only just been born. We plan to add more features that enrich the gaming experience, such as human vs. AI matches and play assistance. Please continue to support Marltas!

[1] Bellemare, M. G., Naddaf, Y., Veness, J. and Bowling, M.: The Arcade Learning Environment: An Evaluation Platform for General Agents, Journal of Artificial Intelligence Research, Vol. 47, pp. 253–279 (2013).

[2] Vinyals, O., Babuschkin, I., Chung, J., Mathieu, M., Jaderberg, M., Czarnecki, W. M., Dudzik, A., Huang, A., Georgiev, P., Powell, R., Ewalds, T., Horgan, D., Kroiss, M., Danihelka, I., Agapiou, J., Oh, J., Dalibard, V., Choi, D., Sifre, L., Sulsky, Y., Vezhnevets, S., Molloy, J., Cai, T., Budden, D., Paine, T., Gulcehre, C., Wang, Z., Pfaff, T., Pohlen, T., Wu, Y., Yogatama, D., Cohen, J., McKinney, K., Smith, O., Schaul, T., Lillicrap, T., Apps, C., Kavukcuoglu, K., Hassabis, D. and Silver, D.: AlphaStar: Mastering the Real-Time Strategy Game StarCraft II, https://deepmind.com/blog/alphastar-mastering-real-time-strategy-game-starcraft-ii/ (2019).

[3] Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., Graves, A., Riedmiller, M., Fidjeland, A. K., Ostrovski, G., Petersen, S., Beattie, C., Sadik, A., Antonoglou, I., King, H., Kumaran, D., Wierstra, D., Legg, S. and Hassabis, D.: Human-level control through deep reinforcement learning, Nature, Vol. 518, No. 7540, pp. 529–533 (2015).

[4] Horgan, D., Quan, J., Budden, D., Barth-Maron, G., Hessel, M., van Hasselt, H. and Silver, D.: Distributed Prioritized Experience Replay, International Conference on Learning Representations, pp. 1–19 (2018).

[5] Liang, E., Liaw, R., Moritz, P., Nishihara, R., Fox, R., Goldberg, K., Gonzalez, J. E., Jordan, M. I. and Stoica, I.: RLlib: Abstractions for Distributed Reinforcement Learning, International Conference on Machine Learning (2018).