This article is automatically translated.

Hello, this is Kazuma Sasaki from Dwango Media Village (DMV). As we did last year, DMV took part in MIRU2019, the “22nd Symposium on Image Recognition and Understanding.” This time we attended as research presenters rather than exhibitors. In this article, I introduce the research we presented, titled “Marltas: A Browser Game Execution Environment for Reinforcement Learning Using Headless Browsers.”

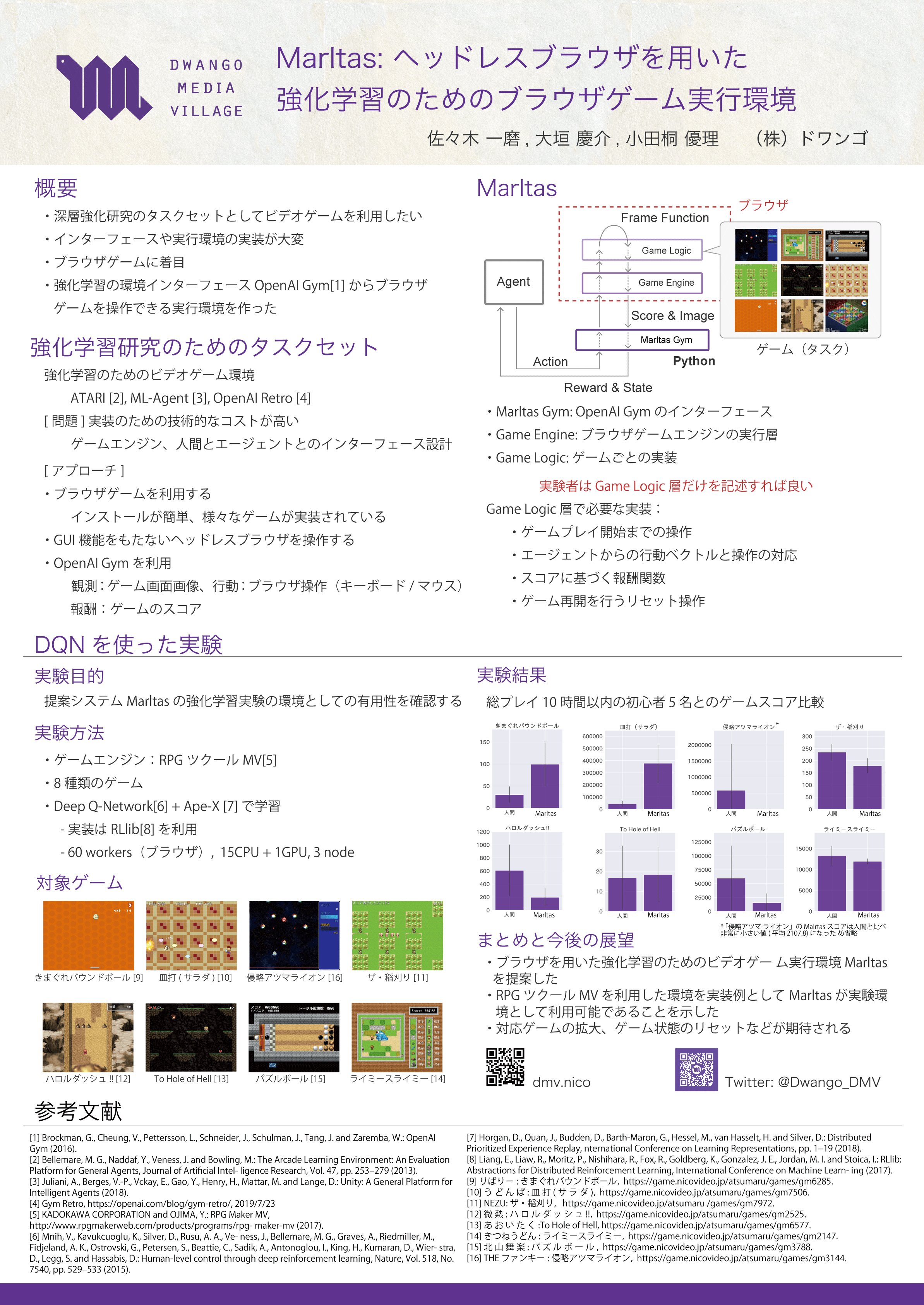

We presented this work as a poster at this year’s MIRU. The research proposes a system that serves as an experimental platform for reinforcement learning research.

The emergence of the Deep Q-Network (DQN), which uses a deep neural network to handle images directly as observations, sparked the recent wave of reinforcement learning research. Reinforcement learning is a type of online learning in which an agent with a policy interacts with an environment to generate data and learn from it. The environment is a system whose state changes in response to the agent’s actions, and the agent selects actions based on the environment’s state. After each transition the agent receives a reward, a value that rates how “good” the state transition was, determined by a reward function designed in advance. Reinforcement learning aims to find the optimal policy, the one that maximizes the expected cumulative reward. Various policy optimization and learning methods have been proposed for this, including Q-learning.
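The agent-environment loop and the Q-learning update described above can be sketched on a toy problem. This is only a minimal illustration under assumptions of my own: `ChainEnv`, `train_q_learning`, and `greedy_policy` are hypothetical names, and the table-based learner stands in for DQN, which replaces the table with a neural network that reads images.

```python
import random


class ChainEnv:
    """Hypothetical toy environment: walk right along a line to reach the goal."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # Action 0 moves left, action 1 moves right; walls clamp the position.
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0  # reward function designed in advance
        return self.state, reward, done


def train_q_learning(env, episodes=300, alpha=0.5, gamma=0.9,
                     epsilon=0.2, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(env.length)]  # Q-table: q[state][action]
    for _ in range(episodes):
        state = env.reset()
        for _ in range(max_steps):
            # Epsilon-greedy policy: explore sometimes, break ties randomly.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = env.step(action)
            # Q-learning target: reward plus discounted best next value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    # Pick the action with the larger Q-value in each state.
    return [0 if left > right else 1 for left, right in q]


if __name__ == "__main__":
    q_table = train_q_learning(ChainEnv())
    print(greedy_policy(q_table))
```

After training, the greedy policy should choose "move right" in every non-terminal state, since that is the shortest path to the only reward.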

Of course, an environment is needed to evaluate a policy optimization method. Recent reinforcement learning research often uses video games as environments, because they are easy to simulate and their scores make it easy to design reward functions. Representative examples include the Arcade Learning Environment (ALE), a collection of Atari game environments, and OpenAI’s Gym Retro.

While video games are convenient as reinforcement learning tasks, preparing them for experiments is technically costly. To use a game as a reinforcement learning environment, additional pieces must be implemented, such as the setup of the execution environment and the interface with the agent.

Therefore, in this research we focused on browser games and developed “Marltas,” a game execution environment for reinforcement learning that operates the browser through OpenAI Gym, the representative interface for reinforcement learning environments. Marltas uses headless browsers (browsers without GUI functions) and is implemented separately from the game engine and the game software. This design minimizes the implementation effort and makes it easy to prepare a variety of environments.
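To make the idea concrete, here is a rough sketch of how a browser game could be wrapped behind a Gym-style `reset()` / `step(action)` interface. It is not Marltas’s actual API: `FakeHeadlessBrowser`, `BrowserGameEnv`, and all of their methods are hypothetical, the browser is a stub so that the example runs without any dependencies, and using the score difference as the reward is my assumption.

```python
class FakeHeadlessBrowser:
    """Stand-in for a real headless browser session (hypothetical interface).

    A real setup would drive an actual browser in headless mode; this stub
    only mimics the operations the environment needs.
    """

    def __init__(self):
        self.score = 0
        self.frame = 0

    def load_game(self):
        self.score = 0
        self.frame = 0

    def send_key(self, key):
        # Pretend the game awards one point per "ArrowRight" press.
        self.frame += 1
        if key == "ArrowRight":
            self.score += 1

    def screenshot(self):
        # A real browser would return pixels; a 2x2 grid stands in here.
        return [[self.frame % 256] * 2 for _ in range(2)]

    def read_score(self):
        return self.score

    def is_game_over(self):
        return self.frame >= 5


class BrowserGameEnv:
    """Gym-style environment: step() returns (observation, reward, done, info)."""

    KEYS = ["ArrowLeft", "ArrowRight"]  # discrete action index -> key press

    def __init__(self, browser):
        self.browser = browser
        self.last_score = 0

    def reset(self):
        self.browser.load_game()
        self.last_score = self.browser.read_score()
        return self.browser.screenshot()

    def step(self, action):
        self.browser.send_key(self.KEYS[action])
        score = self.browser.read_score()
        reward = score - self.last_score  # assumption: reward = score gained
        self.last_score = score
        done = self.browser.is_game_over()
        return self.browser.screenshot(), reward, done, {"score": score}


def run_episode(env, policy):
    # Standard agent-environment loop over one episode.
    obs = env.reset()
    total, done = 0, False
    while not done:
        obs, reward, done, info = env.step(policy(obs))
        total += reward
    return total


if __name__ == "__main__":
    env = BrowserGameEnv(FakeHeadlessBrowser())
    print(run_episode(env, lambda obs: 1))
```

Because the environment only talks to the browser object, the game itself needs no modification; swapping the stub for a real headless browser session would leave the agent-side code unchanged.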

To demonstrate that the proposed method is actually usable as a reinforcement learning platform, we set up eight games from “RPG Atsumaru,” our browser game submission service, as example environments. We then ran learning experiments and compared the scores of baseline DQN agents with those of beginner human players.

Below are examples of gameplay videos at the beginning, middle, and end of the learning process. For detailed results and learning methods, please refer to the paper.

These games are what made our experiments with Marltas possible. We would like to thank the game authors. Thank you very much!

At the symposium, we presented our research with a poster. You can download the preprint version of the submitted paper from the link below. On the day of the presentation, attendees could watch videos of the trained DQN agents actually playing the games.

The symposium was even livelier than last year. Many presentations focused on deep learning, particularly image recognition and generation techniques using Convolutional Neural Networks. Notably, some studies opened up new problem settings by exploring interdisciplinary areas such as medical applications, robotics, pseudo-online learning, and the use of natural language. Many others addressed issues that arise in practical application, such as data scarcity, adversarial attacks, and explainability, which shows how widely deep learning technology has spread. I am excited to see what new research will emerge next year.