This article is automatically translated.

As of May 2022, with the commercialization of the SeirenVoice series, the demo page has been discontinued.

Here is a video in which the author’s voice was recorded, converted, and then combined with the original footage. The audio was captured with the same smartphone used for filming, so it includes room reverb and is slightly unclear. Even so, you can see that the voice conversion works well.

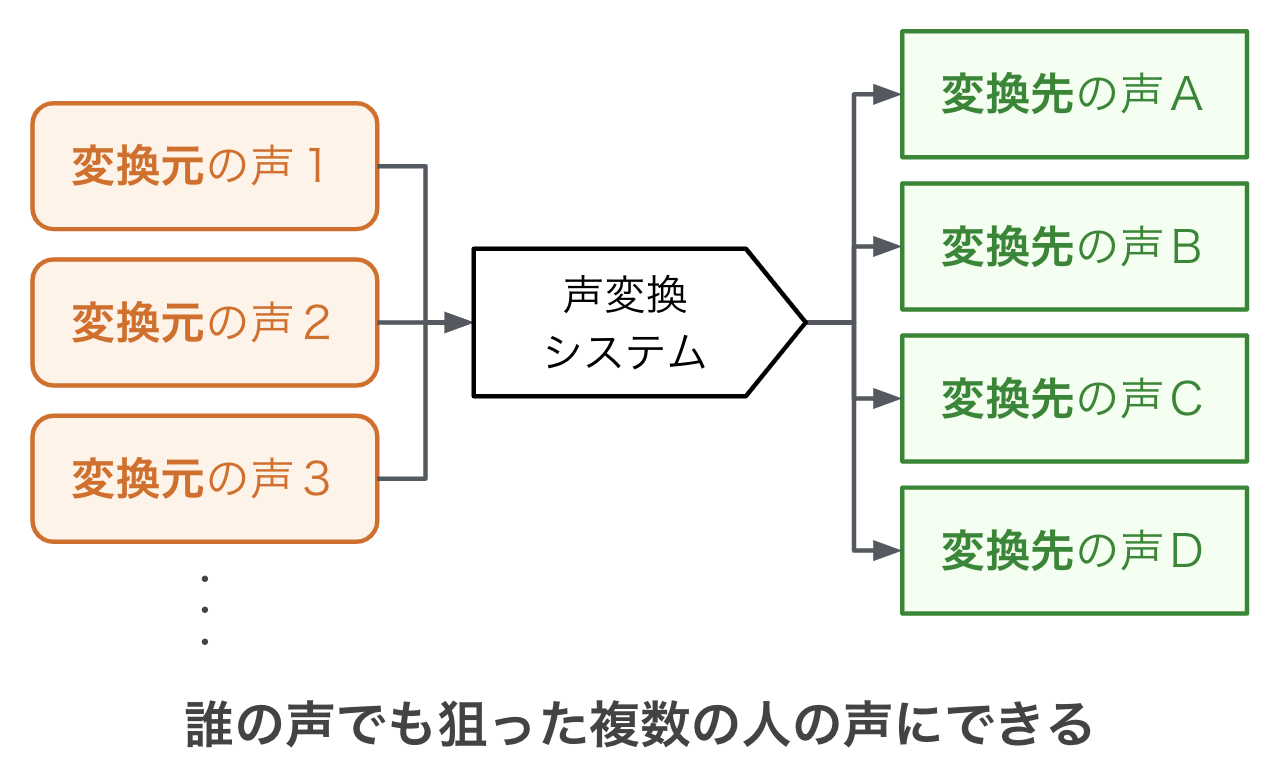

I’m Hiroshiba from Dwango Media Village. We have developed a voice conversion system that can transform anyone’s voice into the voices of multiple target speakers, and we have released a demo page where you can actually change your own voice. In this article, I will introduce the challenges we faced while researching and developing this voice conversion technology.

Voice conversion involves a trade-off between real-time performance and quality. Existing voice conversion systems tend to prioritize real-time performance, and systems that focus on quality are less common. We believed that a quality-focused system could open up new applications, so we took on the research and development ourselves.

The quality of speech synthesis, including voice conversion, has improved significantly in recent years thanks to advances in deep learning. Notably, WaveNet [1], a deep learning model that generates audio autoregressively, one sample at a time, made it possible to create audio that is almost indistinguishable from real speech. WaveNet produces high-quality audio but generates it slowly, which led to the development of faster models such as WaveRNN [2]. Because our focus was quality, we used WaveRNN for our voice conversion system.

However, achieving quality-focused voice conversion posed two challenges: “enabling voice conversion from anyone’s voice” and “converting to various people’s voices.”

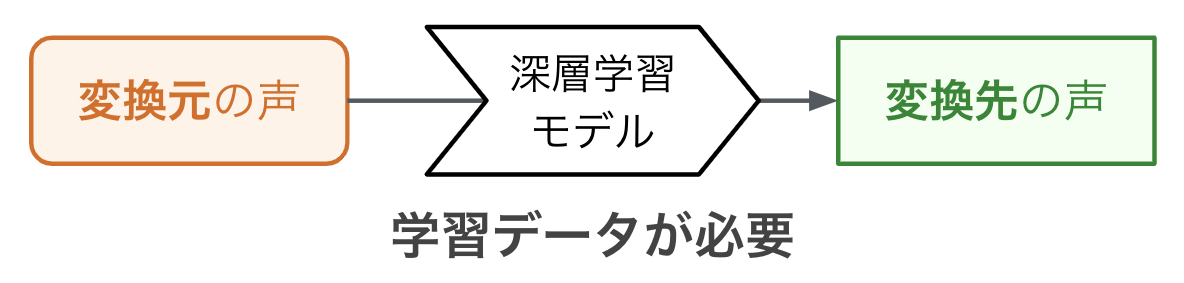

One approach to voice conversion with deep learning is to prepare paired recordings of the same sentences read by both the source and target speakers and to use these pairs as training data. This method was also used in a previous study, “Voice Quality Conversion Divided into Two Stages: Conversion and Refinement” [3]. Its drawback is that it is very labor-intensive: the source speaker has to read and record many sentences, and a model then has to be trained on those recordings. We therefore looked for a voice conversion method that requires neither source voice data nor retraining.

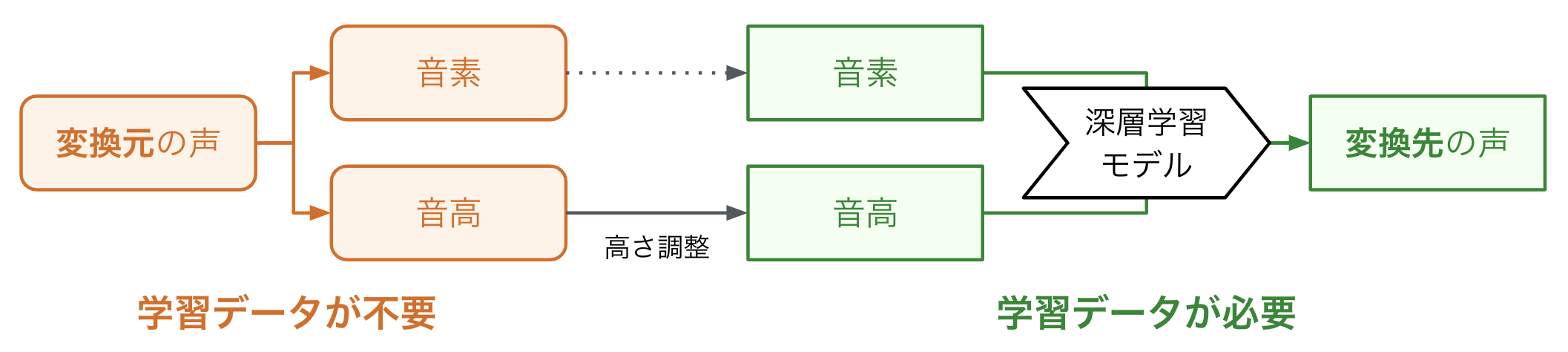

Deep learning-based voice conversion needs source voice data because it tackles the problem directly, end to end. We developed a method that avoids source voice data by decomposing audio into speaker-independent elements and handling the source and target voices in separate steps. We chose phonemes to represent linguistic information, and pitch and pronunciation timing to represent non-linguistic information. For training, we used existing tools to extract these elements from the target speaker’s audio and built a deep learning model that synthesizes the audio from the extracted elements. For conversion, we extract phonemes and pitch from the source voice and synthesize the target voice with that model. Because the source and target speakers differ in pitch, we apply a linear transformation to align the source pitch with the target. With this method, any voice can be converted using only a model that synthesizes speech from phonemes and pitch. We used OpenJTalk for phoneme extraction and WORLD for pitch extraction.
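The linear pitch alignment mentioned above can be illustrated with a minimal sketch. The article does not say which linear transformation was used, so this example assumes a common choice: matching the mean and standard deviation of log-F0 between the two speakers. The function names are hypothetical, and the sketch assumes unvoiced frames are marked as 0 Hz in the pitch contour.

```python
import math
from statistics import fmean, pstdev


def log_f0_stats(f0_hz):
    """Return (mean, std) of log-F0 over voiced frames (f0 > 0)."""
    voiced = [math.log(f) for f in f0_hz if f > 0]
    return fmean(voiced), pstdev(voiced)


def convert_f0(source_f0_hz, source_stats, target_stats):
    """Linearly map source log-F0 onto the target speaker's log-F0 statistics.

    Assumption: mean/variance matching in the log domain. This is one common
    linear transformation, not necessarily the one used in the article.
    """
    src_mean, src_std = source_stats
    tgt_mean, tgt_std = target_stats
    scale = tgt_std / src_std if src_std > 0 else 1.0
    converted = []
    for f in source_f0_hz:
        if f <= 0:
            converted.append(0.0)  # keep unvoiced frames unvoiced
        else:
            log_f = (math.log(f) - src_mean) * scale + tgt_mean
            converted.append(math.exp(log_f))
    return converted


# Toy contours: the target speaker is exactly one octave above the source.
source_f0 = [0.0, 110.0, 120.0, 130.0, 0.0]
target_f0 = [0.0, 220.0, 240.0, 260.0, 0.0]
converted = convert_f0(source_f0, log_f0_stats(source_f0), log_f0_stats(target_f0))
```

In this toy case the converted contour lands one octave above the source while the unvoiced frames stay at 0 Hz. In practice the target statistics would be computed once per target speaker from that speaker's training audio.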

There are several ways to synthesize the voices of many different people with deep learning. Beyond the technical issues, however, it was difficult to obtain large-scale, high-quality Japanese audio data. The release of the “JVS Corpus” [4], a high-quality audio dataset recorded by 100 professional speakers, changed the situation and allowed us to experiment with converting Japanese speech into 100 voices. The JVS Corpus solved the data problem, but we still had to make various adjustments to train a model that could convert to many different voices.

First, we cleaned the data. The JVS Corpus contains approximately 10,000 audio clips. We spent about 20 hours reviewing all of their waveforms, removing incomplete recordings, correcting text labels, and aligning punctuation positions.

Next, we searched for parameters that would make training succeed. Using the automatic optimization framework Optuna, we ran experiments for three months on 12 GPUs (approximately 26,000 GPU-hours in total) to find satisfactory parameters. We explored 10 kinds of parameters, including the learning rate, audio length, number of layers, number of channels, and weight initialization function. In total we ran 1,280 trials. A single trial takes about a week to run to completion, but the pruning feature let us stop unpromising trials early and search efficiently.
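To see why pruning mattered, it helps to compare the figures above: 1,280 one-week trials would far exceed three months on 12 GPUs. The sketch below first checks that arithmetic (assuming 90 days for three months and one GPU per trial, neither of which the article states), then shows a median-style early-stopping rule in plain Python. It does not use the Optuna API, the article does not say which pruner was used, and `fake_loss` is a hypothetical stand-in for a real validation loss.

```python
import math
import random
from statistics import median

# Budget check, assuming 90 days for "three months" and one GPU per trial.
budget_hours = 12 * 90 * 24        # 25,920, i.e. roughly 26,000 GPU-hours
unpruned_hours = 1280 * 7 * 24     # 215,040 GPU-hours if every trial ran a full week


def fake_loss(learning_rate, step):
    """Hypothetical stand-in for a validation loss; not a real model."""
    return abs(math.log10(learning_rate) + 3.0) + 1.0 / (step + 1)


def run_trials(n_trials, n_steps, seed=0, warmup_trials=3):
    """Random search that stops a trial once its loss is worse than the
    median loss of earlier trials at the same step."""
    rng = random.Random(seed)
    history = []  # per-trial list of intermediate losses
    results = []  # (learning_rate, last_loss, steps_run, pruned)
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-5, -1)  # sample the learning rate on a log scale
        losses, pruned = [], False
        for step in range(n_steps):
            loss = fake_loss(lr, step)
            losses.append(loss)
            earlier = [h[step] for h in history if len(h) > step]
            if len(earlier) >= warmup_trials and loss > median(earlier):
                pruned = True  # unpromising: stop spending compute on it
                break
        history.append(losses)
        results.append((lr, losses[-1], len(losses), pruned))
    return results


results = run_trials(n_trials=20, n_steps=10)
steps_used = sum(steps for _, _, steps, _ in results)
```

Comparing `steps_used` with the `20 * 10` steps an unpruned search would take shows how much compute the rule saves on this toy objective. Under the stated assumptions, the unpruned budget would be roughly eight times the GPU-hours actually spent.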

Through this trial and error, we created a voice conversion system that can transform any voice into any of 100 voices.

We prepared audio samples in which the author’s voice is converted with the developed system. You can compare the original and converted voices.

| Input Audio | Converted Voice 1 (Female Voice JVS010) | Converted Voice 2 (Male Voice JVS041) | Converted Voice 3 (Female Voice JVS058) | Converted Voice 4 (Male Voice JVS021) |
|---|---|---|---|---|

Furthermore, we have released a demo page for this voice conversion system. You can actually convert your voice using a browser that can record audio on both PCs and smartphones. Please give it a try.

I was interested in quality-focused voice conversion that sets real-time performance aside, and I was able to complete a working demo. The system is not yet perfect; for example, it cannot accurately convert sounds that phonemes do not represent, such as laughter. Going forward, I plan to extend its capabilities while considering potential applications in entertainment.

Thanks to Prof. Takamichi for releasing the JVS Corpus, which enabled us to take on the development of this voice conversion system. Thank you for releasing such fun and inspiring audio data.

[1] Oord, A. V. D., Dieleman, S., Zen, H., Simonyan, K., Vinyals, O., Graves, A., ... and Kavukcuoglu, K.: WaveNet: A generative model for raw audio. arXiv preprint, 2016. arXiv:1609.03499

[2] Kalchbrenner, N., Elsen, E., Simonyan, K., Noury, S., Casagrande, N., Lockhart, E., ... and Kavukcuoglu, K.: Efficient Neural Audio Synthesis, ICML, 2018.

[3] Miyamoto, S., Nose, T., Hiroshiba, K., Odagiri, Y., and Ito, A.: Two-Stage Sequence-to-Sequence Neural Voice Conversion with Low-to-High Definition Spectrogram Mapping, IIH-MSP, 2018. DOI:10.1007/978-3-030-03748-2_16

[4] Takamichi, S., Mitsui, K., Saito, Y., Koriyama, T., Tanji, N., and Saruwatari, H.: JVS corpus: free Japanese multi-speaker voice corpus. arXiv preprint, 2019. arXiv:1908.06248