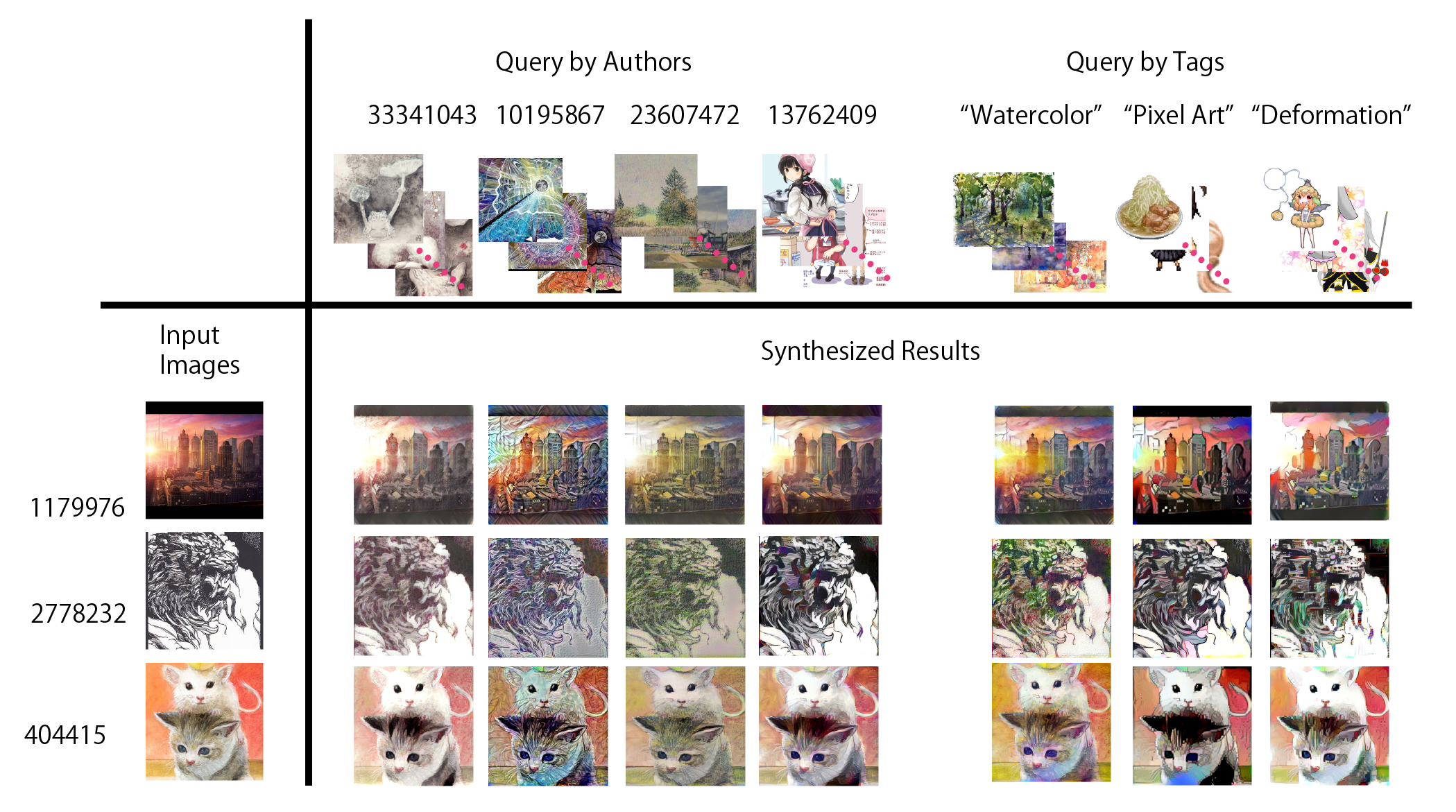

We present an algorithm that learns a desired style of artwork from a collection of images and transfers this style to an arbitrary image. Our method is based on the observation that a style of artwork is characterized not by the features of a single work but by the features that commonly appear across a collection of works. Learning such a representation of style requires a sufficiently large dataset of images created in the same style. We therefore present a novel illustration dataset of 500,000 images, consisting mainly of digital paintings and annotated with rich metadata such as tags and comments. We use a feature space constructed from statistical properties of CNN feature responses and represent the style as a closed region within this feature space. Experimental results show that this closed region can be used to synthesize an appropriate texture belonging to the desired style and to transfer the synthesized texture to a given input image.
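The abstract does not say which statistics define the feature space or how the closed region is constructed. The sketch below is a minimal illustration under two stated assumptions: (1) the statistics are Gram matrices of CNN feature maps, as in Gatys et al.'s neural texture synthesis, and (2) the style region is the convex hull of the Gram matrices of the reference images, so any target style is a convex blend of them. The functions `gram_matrix`, `blend_grams`, and `style_loss` are hypothetical names, the toy feature maps stand in for real CNN activations, and this is not the authors' implementation.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """Gram matrix of a C x N feature map (C channels, N spatial positions).

    Entry (i, j) is the inner product of channels i and j divided by N,
    a second-order statistic of the (assumed) CNN responses.
    """
    c = len(features)
    n = len(features[0])
    return [
        [sum(features[i][k] * features[j][k] for k in range(n)) / n for j in range(c)]
        for i in range(c)
    ]


def blend_grams(grams: Sequence[Matrix], weights: Sequence[float]) -> Matrix:
    """Convex combination of reference Gram matrices.

    Assumption: the style region is the convex hull of the references.
    Non-negative weights summing to 1 keep the blend inside that hull.
    """
    if len(grams) != len(weights):
        raise ValueError("need one weight per Gram matrix")
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    c = len(grams[0])
    return [
        [sum(w * g[i][j] for w, g in zip(weights, grams)) for j in range(c)]
        for i in range(c)
    ]


def style_loss(generated: Matrix, target: Matrix) -> float:
    """Squared Frobenius distance between two Gram matrices."""
    c = len(target)
    return sum((generated[i][j] - target[i][j]) ** 2 for i in range(c) for j in range(c))


# Toy "feature maps" (2 channels x 3 positions) standing in for CNN activations
# of two reference images in the same style.
a = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
b = [[0.0, 2.0, 0.0], [1.0, 1.0, 1.0]]

# A target style point inside the region spanned by the two references.
target = blend_grams([gram_matrix(a), gram_matrix(b)], [0.5, 0.5])

# How far reference image `a` alone is from the blended target.
loss_a = style_loss(gram_matrix(a), target)
```

In a full pipeline, `style_loss` against the blended target would be minimized with respect to the pixels of the output image (typically alongside a content term), rather than evaluated once on fixed features as in this toy example.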

@inproceedings{ikuta2016,
  author    = {Ikuta, Hikaru and Ogaki, Keisuke and Odagiri, Yuri},
  booktitle = {SIGGRAPH Asia Technical Briefs},
  title     = {Blending Texture Features from Multiple Reference Images for Style Transfer},
  year      = {2016}
}