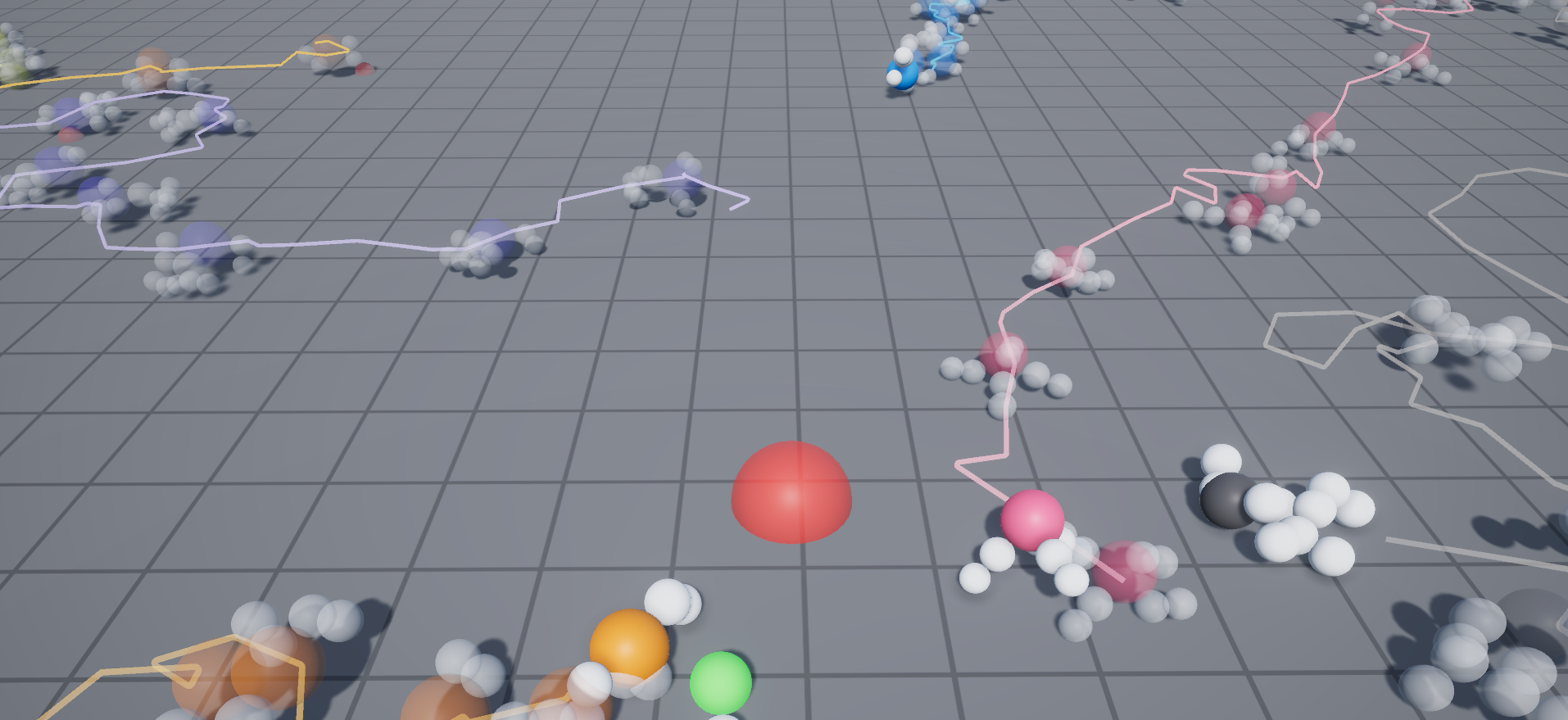

Generating motions for original, imaginary creatures is an essential part of animation and computer games. One way to generate such motions is to search for the optimal motion that brings a creature toward a goal, given its body and motor skills. Researchers currently use deep reinforcement learning (DeepRL) to find such optimal motions. Some end-to-end DeepRL approaches learn a policy function that outputs a target pose for each joint according to the environment. In our study, we employ a hierarchical approach with two separate layers, a DeepRL decision maker and a simple exploration-based sequence maker, which communicate through an action token. By optimizing these two layers independently, we obtain a lightweight, fast-learning system that can run on mobile devices. In addition, we propose a technique that speeds up policy learning with the help of a heuristic rule. By treating the heuristic rule as an additional action token, we can incorporate it naturally via Q-learning. The experimental results show that creatures perform better when heuristics and DeepRL are used together than when either is used alone.
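The idea of exposing a heuristic rule as one more action token can be illustrated with a minimal sketch. This is not the authors' implementation: it uses tabular Q-learning in place of a deep Q-network, a toy one-dimensional world in place of a physics simulation, and a trivial lookup in place of the exploration-based sequence maker. All names (`TOKENS`, `heuristic_rule`, `execute`, `train`, `greedy_rollout`) and all constants are hypothetical and chosen only for illustration.

```python
import random

# Hypothetical action tokens: two primitive motions plus one heuristic rule.
TOKENS = ["step_left", "step_right", "heuristic"]
GOAL, SIZE = 7, 10  # toy 1-D world with positions 0..9 (an assumption)


def heuristic_rule(pos):
    """Hand-written rule: always step toward the goal."""
    return 1 if pos < GOAL else -1


def execute(token, pos):
    """Stand-in for the sequence maker: turn a token into a new position."""
    delta = {"step_left": -1, "step_right": 1}.get(token)
    if delta is None:  # the heuristic token defers to the rule
        delta = heuristic_rule(pos)
    return max(0, min(SIZE - 1, pos + delta))


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning over tokens; the heuristic is just another column."""
    rng = random.Random(seed)
    q = [[0.0] * len(TOKENS) for _ in range(SIZE)]
    for _ in range(episodes):
        pos = rng.randrange(SIZE)
        for _ in range(50):
            if pos == GOAL:
                break
            if rng.random() < epsilon:  # explore
                a = rng.randrange(len(TOKENS))
            else:  # exploit the current estimate
                a = max(range(len(TOKENS)), key=lambda i: q[pos][i])
            nxt = execute(TOKENS[a], pos)
            reward = 1.0 if nxt == GOAL else -0.01
            future = 0.0 if nxt == GOAL else gamma * max(q[nxt])
            q[pos][a] += alpha * (reward + future - q[pos][a])
            pos = nxt
    return q


def greedy_rollout(q, pos, max_steps=50):
    """Follow the learned greedy policy; return final position and step count."""
    steps = 0
    while pos != GOAL and steps < max_steps:
        a = max(range(len(TOKENS)), key=lambda i: q[pos][i])
        pos = execute(TOKENS[a], pos)
        steps += 1
    return pos, steps
```

Because the heuristic occupies an ordinary slot in the Q-table, the learner is free to rely on it where it helps and to prefer the primitive tokens elsewhere; no special-case logic is needed in the update rule. Calling `greedy_rollout(train(), 0)` runs the learned policy from the leftmost position.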

https://github.com/dwango/RLCreature

https://github.com/dwango/MotionGenerator

https://github.com/dwango/TinyChainerSharp

@inproceedings{ogaki2018,
  author = {Keisuke Ogaki and Masayoshi Nakamura},
  title = {Real-Time Motion Generation for Imaginary Creatures Using Hierarchical Reinforcement Learning},
  booktitle = {ACM SIGGRAPH 2018 Studio},
  year = {2018},
  publisher = {ACM}
}

[1] Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A. Rusu, Joel Veness, Marc G. Bellemare, Alex Graves, Martin Riedmiller, Andreas K. Fidjeland, Georg Ostrovski, Stig Petersen, Charles Beattie, Amir Sadik, Ioannis Antonoglou, Helen King, Dharshan Kumaran, Daan Wierstra, Shane Legg, and Demis Hassabis. 2015. Human-level control through deep reinforcement learning. Nature 518 (2015),

[2] Seiya Tokui, Kenta Oono, Shohei Hido, and Justin Clayton. 2015. Chainer: a Next-Generation Open Source Framework for Deep Learning. In Proceedings of Workshop on Machine Learning Systems (LearningSys) in The Twenty-ninth Annual Conference on Neural Information Processing Systems (NIPS).