This article is automatically translated.

I’m Ikuta (http://woodrush.github.io/), an intern at Dwango Media Village. Dwango Media Village engages in research and development in fields such as machine learning, 3DCG, and computer vision.

At SIGGRAPH Asia 2016, held December 5-8, 2016, I presented a Technical Brief titled “Blending Texture Features from Multiple Reference Images for Style Transfer.” The implementation code and presentation slides are available in the nico-opendata use-case article “Style Transfer Using Multiple Reference Images.”

This article gives an overview of the presentation.

This research extends the work of [Gatys2016]. It is a system for “redrawing a given image in the style of a group of other images.”

Redrawing an image in a different style is in wide demand in fields such as design. For example, in animation production, backgrounds are often made by redrawing real photographs in a style that matches the animation, and this is usually done by hand. The goals and features of this research are to:

Specifically, as shown in the figure above:

are input, resulting in:

The main difference from the work of L. A. Gatys et al. is that the style is specified not by a single image but by many images belonging to that style. Using many example images of a style enables more natural style transfer, which was verified on a new dataset, the nico-illust dataset. Below, I describe the differences from prior work in detail.

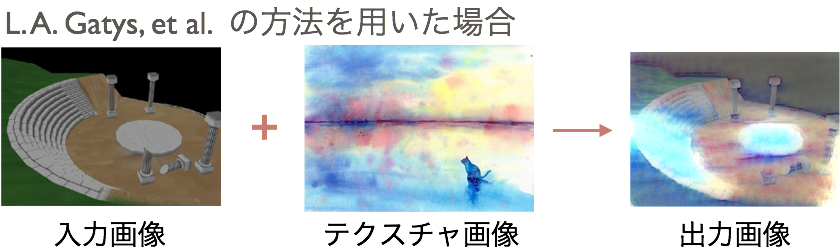

In the prior work of L. A. Gatys et al., the color distribution of the output is strongly influenced by the color distribution of the style reference image.

The figure above shows an attempt at “watercolor style” conversion using the method of L. A. Gatys et al. In this image:

This happens because “watercolor style” cannot be captured by a single image alone. Given only one watercolor image, it is hard to tell whether its “watercolor style” comes from its texture or from its choice of colors.

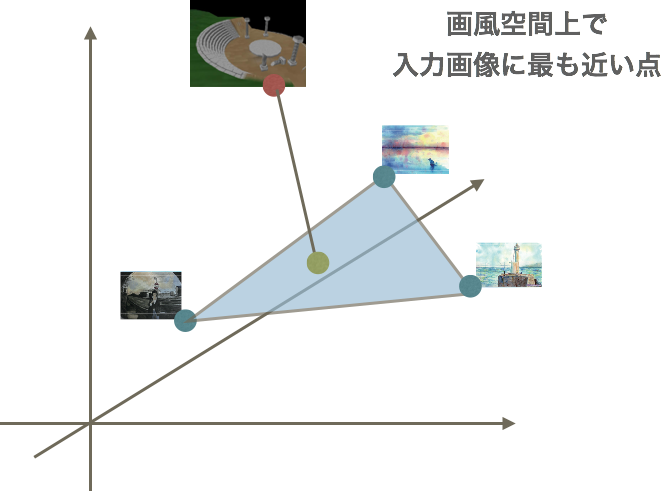

To address this, this research proposes describing a style with many images. In the method of L. A. Gatys et al., style transfer uses features that capture both the texture and the color information of an image; in this research, these features are called texture features. The method of L. A. Gatys et al. computes the texture features of a single input style image and uses them directly for style transfer. In contrast, this research computes the texture features of each image in the style image group, blends them to obtain the texture features best suited to the input image, and uses those for style transfer. The basis for this operation is:

From these two points, the main claim of this research is that, by varying the weights of a linear combination of the texture features of images belonging to the same style, one can generate a wide range of texture features that represent that style. The set of texture features generated in this way is called the style space. Using it, the method of this research is to:

The following diagram illustrates this process.

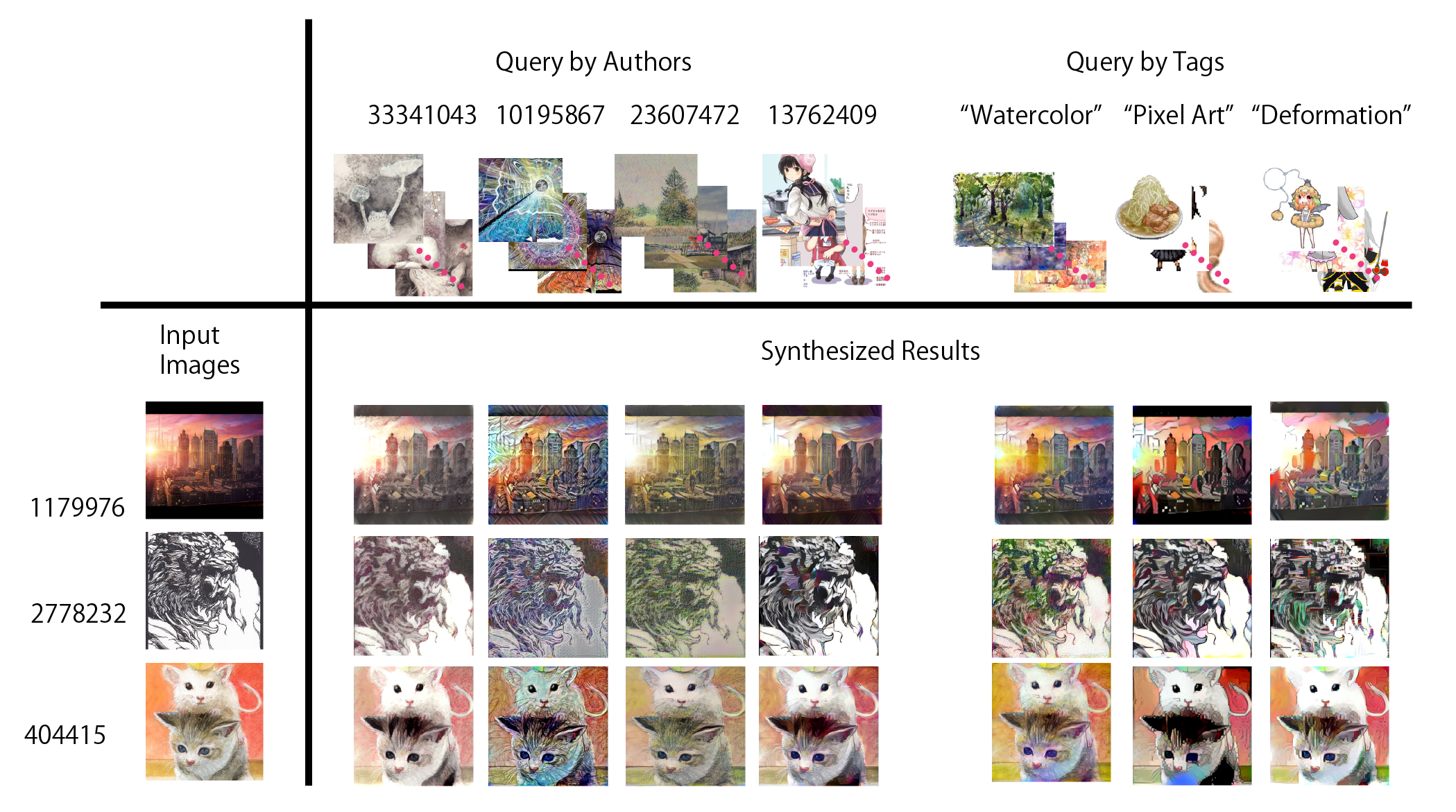
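To make the blending step concrete, here is a minimal pure-Python sketch under stated assumptions. It assumes that texture features are Gram matrices of a CNN feature map, as in [Gatys2016], normalized here by the number of spatial positions (one common convention), and that the “optimal” blend is the unconstrained least-squares fit of the style images' Gram matrices to the input image's Gram matrix. The function names and the small `ridge` term are hypothetical; the paper's actual criterion, normalization, or constraints on the weights may differ. A real implementation would take feature maps from several VGG layers and keep one Gram matrix per layer.

```python
from typing import List

Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """Gram matrix of a C x M feature map (C channels, M flattened positions).

    G[i][j] = sum_k F[i][k] * F[j][k] / M  (normalization by M is an assumption).
    """
    c, m = len(features), len(features[0])
    return [[sum(features[i][k] * features[j][k] for k in range(m)) / m
             for j in range(c)] for i in range(c)]


def _flatten(g: Matrix) -> List[float]:
    return [x for row in g for x in row]


def _solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x


def blend_weights(style_grams: List[Matrix], target_gram: Matrix,
                  ridge: float = 1e-8) -> List[float]:
    """Weights w minimizing ||sum_i w_i G_i - G_target||_F^2 (hypothetical criterion).

    Solves the normal equations (V^T V + ridge * I) w = V^T t, where each column
    of V is a flattened style Gram matrix; the tiny ridge keeps them solvable.
    """
    vecs = [_flatten(g) for g in style_grams]
    t = _flatten(target_gram)
    n = len(vecs)
    ata = [[sum(p * q for p, q in zip(vecs[i], vecs[j])) + (ridge if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    atb = [sum(p * q for p, q in zip(vecs[i], t)) for i in range(n)]
    return _solve(ata, atb)


def blend(style_grams: List[Matrix], weights: List[float]) -> Matrix:
    """Linear combination sum_i w_i G_i: one point in the style space."""
    c = len(style_grams[0])
    return [[sum(w * g[i][j] for w, g in zip(weights, style_grams))
             for j in range(c)] for i in range(c)]
```

In use, one would compute `gram_matrix` for each style image and for the input image, call `blend_weights` to get the weights, and use `blend(style_grams, weights)` as the style target in place of a single image's Gram matrix. Least squares is chosen here only because it is the simplest closed-form way to find the point in the style space nearest the input image.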

The following figure shows a selection of results from this research.

This research used the following implementation and execution environment for image generation.

Implementation:

Execution Environment:

The implementation requires a neural network pre-trained for image recognition; this research used VGG by Simonyan et al. [Simonyan2015]. The implementation also drew on the Preferred Research blog article on style transfer algorithms [PreferredResearch2015].

The nico-illust dataset, part of nico-opendata released by Dwango, is a dataset of still images posted on Nico Nico Seiga, with various information attached to each image, such as:

This research made particular use of the tag and author information. Tags on Nico Nico Seiga can indicate an image's style, such as “watercolor” or “pixel art,” and were used to collect many images drawn in a specific style. The author information was also used to attempt transferring a specific author's style.
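Selecting style images by tag or by author amounts to a filter over the per-image metadata. The sketch below assumes a hypothetical `IllustRecord` layout with an image ID, an author ID, and a tag list; the actual nico-illust metadata format and field names may differ, and the tag strings are only examples.

```python
from dataclasses import dataclass, field
from typing import Iterable, List


@dataclass
class IllustRecord:
    # Hypothetical record layout; the real nico-illust metadata may differ.
    image_id: str
    author_id: str
    tags: List[str] = field(default_factory=list)


def select_by_tag(records: Iterable[IllustRecord], tag: str) -> List[IllustRecord]:
    """Return records whose tag list contains `tag` exactly (e.g. a style tag)."""
    return [r for r in records if tag in r.tags]


def select_by_author(records: Iterable[IllustRecord], author_id: str) -> List[IllustRecord]:
    """Return every record by one author, to serve as that author's style group."""
    return [r for r in records if r.author_id == author_id]
```

For example, `select_by_tag(records, "水彩")` (水彩 = watercolor) would yield a watercolor style group whose texture features can then be blended.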

During my internship at Dwango Media Village, I received a lot of assistance, and ultimately, I had the opportunity to compile my results and present them at a conference. I learned a lot and had an enjoyable research and development experience. I would like to take this opportunity to express my gratitude to everyone. Thank you very much.

[Gatys2016] GATYS, L. A., ECKER, A. S., AND BETHGE, M. 2016. Image style transfer using convolutional neural networks. In Proceedings of IEEE Computer Vision and Pattern Recognition. http://ieeexplore.ieee.org/document/7780634/

[Simonyan2015] SIMONYAN, K., AND ZISSERMAN, A. 2015. Very deep convolutional networks for large-scale image recognition. In Proceedings of International Conference on Learning Representations. https://arxiv.org/abs/1409.1556

[PreferredResearch2015] Preferred Research Blog. 2015. 画風を変換するアルゴリズム (An algorithm for converting painting styles). https://research.preferred.jp/2015/09/chainer-gogh/